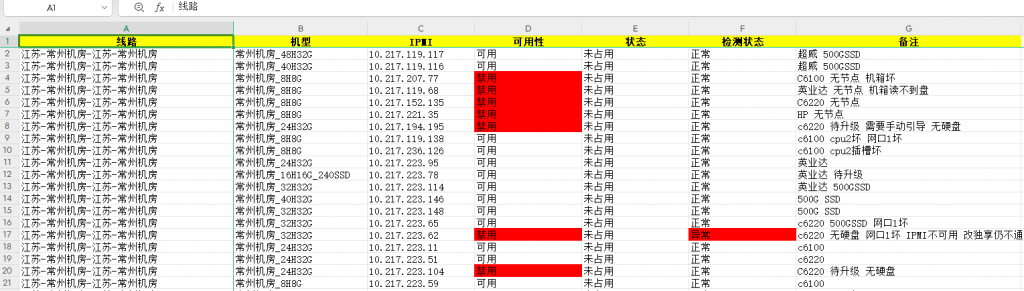

[爬虫]爬取炎黄云后台未分配的库存信息(含图片验证码识别)

要求:

1、爬取常州机房的库存

2、爬取镇江机房的库存

代码(使用selenium爬取慢,对于没有反爬机制的网站可以使用bs4爬取):

#单 位:常州旺龙

#作 者:OLDNI

#开发日期:2023/10/24

"""

爬取炎黄云库存未占用数据

写入excel表格

"""

import os

import time

from bs4 import BeautifulSoup

from ddddocr import DdddOcr

from openpyxl import Workbook

from openpyxl.styles import Font, PatternFill, Alignment

from selenium import webdriver

from selenium.webdriver.common.by import By

start_time=time.time()

#登录网站,失败会重新登录

def browser_login():

#创建浏览器

options=webdriver.ChromeOptions()

options.add_argument('--headless')

options.add_experimental_option('detach',True)

options.add_argument('--disable-blink-features=AutomationControlled')

# 隐藏"正在受到自动软件的控制"这几个字

options.add_experimental_option("excludeSwitches", ["enable-automation"])

options.add_experimental_option('useAutomationExtension', False)

#需要设置为全局变量,不然后面的就无法使用browser了

global browser

browser=webdriver.Chrome(options=options)

browser.implicitly_wait(10)

browser.get('http://w.yhyun.com/index.php/meihao2021/Login/index.html')

browser.find_element(By.CSS_SELECTOR,'.login_input.login_user.defaultinput').send_keys('13685234796')

browser.find_element(By.CSS_SELECTOR,'.login_input.login_password').send_keys('www.123.nyc')

#验证码识别

ocr=DdddOcr()

img_tag=browser.find_element(By.CSS_SELECTOR,'.show-captcha')

img_tag.screenshot('code.png')

with open('code.png','rb') as f:

img_content=f.read()

result=ocr.classification(img_content)

browser.find_element(By.CSS_SELECTOR,'.login_input.login_verify.defaultinput').send_keys(result)

browser.get_screenshot_as_file('firstpage.png')

browser.find_element(By.CSS_SELECTOR,'.login_submit').click()

#登录前的地址http://w.yhyun.com/index.php/meihao2021/Login/index.html

print(browser.current_url)

time.sleep(5)

#登录后的地址http://w.yhyun.com/index.php/meihao2021/Index/index.html

print(browser.current_url)

#判断是否登录成功,不成功重新登录

if browser.current_url != 'http://w.yhyun.com/index.php/meihao2021/Index/index.html':

browser.quit()

print('登录失败,正重新运行程序!')

browser_login()

#调用函数

browser_login()

#爬取常州和镇江的库存(未占用),打开库存100条

url_list=[

#常州

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1751347433298678&cabinetId=&status=0&check_status=&enable=',

#镇江一区-87

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1702229829009379&cabinetId=&status=0&check_status=&enable=',

#镇江高防-141

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1760593526228195&cabinetId=&status=0&check_status=&enable=',

#镇江三区-101

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1731241996033164&cabinetId=&status=0&check_status=&enable=',

#镇江高防-119

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1735118078781802&cabinetId=&status=0&check_status=&enable=',

#镇江高防-135

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1790051111311838&cabinetId=&status=0&check_status=&enable=',

]

#最后汇总

statistics=[]

# 创建excel表

wb = Workbook()

sheet = wb.active

header = ['线路', '机型', 'IPMI', '可用性', '状态', '检测状态', '备注', ]

sheet.append(header)

for url in url_list:

new_tab=f'window.open("{url}")'

browser.execute_script(new_tab)

browser.switch_to.window(browser.window_handles[-1])

#判断库存有多少页

html=browser.page_source

soup=BeautifulSoup(html,'html.parser')

try:

#从第二页开始标签类的名称都是num,所以要指定最后一个num为所有页数

pages=int(soup.select_one('.num:last-child').text)

except:

pages=1

print(f'当前线路共有{pages}页')

# 计算库存总条数

total = 0

for p in range(1,(pages+1)):

#找到当页所有tr标签元素,一条tr对应一条库存

tr_tag_list=soup.select('#server_list_table>tbody>tr')

print(f'库存条数:{len(tr_tag_list)}')

#计算库存总条数

total+=len(tr_tag_list)

for tr_tag in tr_tag_list:

line=tr_tag.select_one('td:nth-child(2)').text.strip()

type=tr_tag.select_one('td:nth-child(3)').text.strip()

IPMI=tr_tag.select_one('td:nth-child(5)').text.strip()

available=tr_tag.select_one('td:nth-child(6)').text.strip()

status=tr_tag.select_one('td:nth-child(7)').text.strip()

check_status=tr_tag.select_one('td:nth-child(9)').text.strip()

notes=tr_tag.select_one('td:nth-child(10)').text.strip()

data=[line,type,IPMI,available,status,check_status,notes]

sheet.append(data)

print(f'已处理第{p}页数据')

#如果只有一页就不需要点击下一页,如果最后一页也不点击下一页

if pages!=1 or p!=pages:

browser.find_element(By.CSS_SELECTOR,'tbody > tr > td.right > div > table > tbody td:nth-child(4)').click()

html = browser.page_source

soup = BeautifulSoup(html, 'html.parser')

statistics.append(f'{line}库存服务器数:{total}')

browser.close()

browser.switch_to.window(browser.window_handles[0])

browser.close()

sheet.append(statistics)

"""

设置表格样式

"""

#设置列宽

column_number_and_dimensions={'A':40,'B':25,'C':20,'D':20,'E':20,'F':20,'G':42,}

for n,d in column_number_and_dimensions.items():

sheet.column_dimensions[n].width = d

#冻结窗格

sheet.freeze_panes='B2'

#设置表头样式

cells=sheet[1]

font=Font(bold=True)

patternfill=PatternFill(fill_type='solid',fgColor='FFFF00')

alignment=Alignment(horizontal='center')

for cell in cells:

cell.font=font

cell.fill=patternfill

cell.alignment=alignment

#D列内容凡是"禁用"就填充红色(解决条件格式问题)

patternfill=PatternFill(fill_type='solid',fgColor='FF0000')

cells=sheet['D']

for cell in cells:

if cell.value=='禁用':

cell.fill=patternfill

cells=sheet['F']

for cell in cells:

if cell.value=='异常':

cell.fill=patternfill

wb.save('鸟云平台库存数据.xlsx')

end_time=time.time()

#round函数保留浮点数的几位

executtion_time=round((end_time-start_time),2)

print(f'脚本运行时长:{executtion_time}秒')

os.startfile('鸟云平台库存数据.xlsx')bs4方法爬取:

#单 位:常州旺龙

#作 者:OLDNI

#开发日期:2024/2/19

"""

爬取炎黄云库存未占用数据(非chrom,而是使用bs4)

写入excel表格

"""

import os

import re

import time

import requests

from bs4 import BeautifulSoup

from openpyxl import Workbook

from openpyxl.styles import Font, PatternFill, Alignment

start_time=time.time()

#爬取常州和镇江的库存(未占用),打开库存100条

url_list=[

#常州

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1751347433298678&cabinetId=&status=0&check_status=&enable=',

#镇江一区-87

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1702229829009379&cabinetId=&status=0&check_status=&enable=',

#镇江高防-141

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1760593526228195&cabinetId=&status=0&check_status=&enable=',

#镇江三区-101

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1731241996033164&cabinetId=&status=0&check_status=&enable=',

#镇江高防-119

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1735118078781802&cabinetId=&status=0&check_status=&enable=',

#镇江高防-135

'http://w.yhyun.com/index.php/meihao2021/BaremetalStock/index.html?keywordType=id&keyword=&page=1&pagesize=100&lineId=1790051111311838&cabinetId=&status=0&check_status=&enable=',

]

headers={

#这里的cookie是已经登录帐户的,时前长了就会到期,要更换

'Cookie':'PHPSESSID=e53pqbra071u9di5gbj1usk3s3; 116yun__referer__=L2luZGV4LnBocC9tZWloYW8yMDIxL2JhcmVtZXRhbFN0b2NrL2luZGV4; 116yunzkeys_language=zh-CN',

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36 Edg/121.0.0.0',

}

#最后汇总

statistics=[]

# 创建excel表

wb = Workbook()

sheet = wb.active

header = ['线路', '机型', 'IPMI', '可用性', '状态', '检测状态', '备注', ]

sheet.append(header)

for url in url_list:

resp = requests.get(url, headers=headers)

soup = BeautifulSoup(resp.text, 'html.parser')

try:

#从第二页开始标签类的名称都是num,所以要指定最后一个num为所有页数

pages=int(soup.select_one('.num:last-child').text)

except:

pages=1

print('====================================')

print(f'当前线路共有{pages}页')

# 计算库存总条数

total = 0

for p in range(1,(pages+1)):

#找到当页所有tr标签元素,一条tr对应一条库存

tr_tag_list=soup.select('#server_list_table>tbody>tr')

print(f'第{p}页库存条数:{len(tr_tag_list)}')

#计算库存总条数

total+=len(tr_tag_list)

for tr_tag in tr_tag_list:

line=tr_tag.select_one('td:nth-child(2)').text.strip()

type=tr_tag.select_one('td:nth-child(3)').text.strip()

IPMI=tr_tag.select_one('td:nth-child(5)').text.strip()

available=tr_tag.select_one('td:nth-child(6)').text.strip()

status=tr_tag.select_one('td:nth-child(7)').text.strip()

check_status=tr_tag.select_one('td:nth-child(9)').text.strip()

notes=tr_tag.select_one('td:nth-child(10)').text.strip()

data=[line,type,IPMI,available,status,check_status,notes]

sheet.append(data)

print(f'已处理第{p}页数据')

# 处理页数url

lst = re.split('page=|&pagesize', url)

new_url = lst[0] + 'page=' + str(p+1) + '&pagesize' + lst[2]

resp = requests.get(new_url, headers=headers)

soup = BeautifulSoup(resp.text, 'html.parser')

sheet.append(statistics)

"""

设置表格样式

"""

#设置列宽

column_number_and_dimensions={'A':40,'B':25,'C':20,'D':20,'E':20,'F':20,'G':42,}

for n,d in column_number_and_dimensions.items():

sheet.column_dimensions[n].width = d

#冻结窗格

sheet.freeze_panes='B2'

#设置表头样式

cells=sheet[1]

font=Font(bold=True)

patternfill=PatternFill(fill_type='solid',fgColor='FFFF00')

alignment=Alignment(horizontal='center')

for cell in cells:

cell.font=font

cell.fill=patternfill

cell.alignment=alignment

#D列内容凡是"禁用"就填充红色(解决条件格式问题)

patternfill=PatternFill(fill_type='solid',fgColor='FF0000')

cells=sheet['D']

for cell in cells:

if cell.value=='禁用':

cell.fill=patternfill

cells=sheet['F']

for cell in cells:

if cell.value=='异常':

cell.fill=patternfill

wb.save('鸟云平台库存数据.xlsx')

end_time=time.time()

#round函数保留浮点数的几位

executtion_time=round((end_time-start_time),2)

print(f'脚本运行时长:{executtion_time}秒')

os.startfile('鸟云平台库存数据.xlsx')效果: