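OpenStack Train cluster deployment (command set)

Reference notebook: "Extension: OpenStack Train installation"

Environment (public IP, hostname, NIC, OS; C7.9 = CentOS 7.9):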

103.73.119.7 controller ens33 C7.9

103.73.119.8 compute1 ens33 C7.9

103.73.119.9 compute2 ens33 C7.9

1. Set the hostnames and root passwords

# On the controller:

hostnamectl set-hostname controller

echo www.123.nyc|passwd --stdin root

# On compute1:

hostnamectl set-hostname compute1

echo www.123.nyc|passwd --stdin root

# On compute2:

hostnamectl set-hostname compute2

echo www.123.nyc|passwd --stdin root

# On compute3 (if present):

hostnamectl set-hostname compute3

echo www.123.nyc|passwd --stdin root

2. Edit /etc/hosts, generate an SSH key for passwordless login, then distribute both to every node (run on the controller)

# Note: if there is an internal network, the hostnames must resolve to the internal IPs

cat >>/etc/hosts<<EOF

192.168.73.7 controller

192.168.73.8 compute1

192.168.73.9 compute2

EOF

# If a key already exists, ssh-keygen asks before overwriting it; otherwise the key is generated without any prompt

ssh-keygen -N '' -f ~/.ssh/id_rsa

for i in `awk '{print $2}' /etc/hosts|sed -n '3,$'p`;do ssh-copy-id $i;done

for i in `awk '{print $2}' /etc/hosts|sed -n '3,$'p`;do scp /etc/hosts ${i}:/etc;done

I. Commands to run on both the controller and the compute nodes

# Install common tools

yum install -y wget vim bash-completion lrzsz net-tools nfs-utils yum-utils rdate ntpdate

# Disable the firewall and NetworkManager

systemctl disable --now firewalld NetworkManager.service

# Disable SELinux

sed -i 's/SELINUX=enforcing$/SELINUX=disabled/g' /etc/selinux/config

setenforce 0

# Synchronize the clock, write it to the hardware clock, and resync every hour

ntpdate 61.160.213.184

clock -w

echo "0 */1 * * * /usr/sbin/ntpdate 61.160.213.184 &> /dev/null" >> /var/spool/cron/root
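The `echo ... >>` line above adds another copy of the cron entry every time these commands are re-run. A minimal sketch of an idempotent append follows. The helper name `append_once` and the `CRON_FILE` override are illustrative assumptions, not part of the original notes; on a real node `CRON_FILE` would be `/var/spool/cron/root`.

```shell
#!/usr/bin/env bash
# append_once FILE LINE: append LINE to FILE only if that exact line is absent.
# (Hypothetical helper, introduced for illustration.)
append_once() {
    local file=$1 line=$2
    touch "$file"
    # -x: whole-line match, -F: fixed string, -q: no output
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# CRON_FILE defaults to a scratch file so the sketch can be tried safely.
CRON_FILE=${CRON_FILE:-$(mktemp)}
entry='0 */1 * * * /usr/sbin/ntpdate 61.160.213.184 &> /dev/null'

append_once "$CRON_FILE" "$entry"
append_once "$CRON_FILE" "$entry"   # second call changes nothing
cat "$CRON_FILE"
```

The same helper would also suit the other `>>` appends in this section (for example `/etc/profile` and `/etc/bashrc`), assuming each appended line is passed as a single string.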

# Customize the shell prompt

PS1="\[\e[1;32m\][\t \[\e[1;33m\]\u\[\e[35m\]@\h\[\e[1;31m\] \W\[\e[1;32m\]]\[\e[0m\]\\$"

echo 'PS1="\[\e[1;32m\][\t \[\e[1;33m\]\u\[\e[35m\]@\h\[\e[1;31m\] \W\[\e[1;32m\]]\[\e[0m\]\\$"' >>/etc/profile

# Show a timestamp and the user name in the shell history

echo 'export HISTTIMEFORMAT="%F %T `whoami` "' >>/etc/bashrc

# Keep long-running SSH sessions from being dropped

echo -e "ClientAliveInterval 30 \nClientAliveCountMax 86400" >>/etc/ssh/sshd_config

# Fix slow remote logins (skip the reverse DNS lookup in sshd)

sed -i '/UseDNS/a UseDNS no' /etc/ssh/sshd_config

systemctl restart sshd

# Change the SSH port (optional)

#sed -i '/#Port 22/a Port 52113' /etc/ssh/sshd_config

# Switch to the Aliyun mirror (important: the EPEL repo must not be added)

mkdir /etc/yum.repos.d/bak

\mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

#wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

# Comment out the Aliyun-internal (aliyuncs) mirror entries

sed -ri 's@(.*aliyuncs)@#\1@g' /etc/yum.repos.d/CentOS-Base.repo

yum clean all

yum makecache

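# Raise the number of files that can be open at the same time (65535 for the current shell; limits.conf makes the new limits persistent)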

ulimit -n 65535

cat >>/etc/security/limits.conf<<EOF

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

* hard nproc 4096

EOF

# Install the OpenStack Train repository

yum install centos-release-openstack-train -y

# Load the hardware virtualization (KVM) module

modprobe kvm-intel
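`modprobe kvm-intel` only applies to Intel CPUs; AMD hosts use `kvm-amd`, and a host without either CPU flag (typically a virtual machine without nested virtualization) cannot use KVM at all. The sketch below picks the module from the CPU flags. The function name `kvm_module_for` is a hypothetical helper for illustration, and the script only prints what it would do rather than loading anything.

```shell
#!/usr/bin/env bash
# kvm_module_for [CPUINFO_FILE]: print the KVM module that matches the CPU flags,
# or "none" when neither vmx (Intel VT-x) nor svm (AMD-V) is advertised.
# (Hypothetical helper, introduced for illustration.)
kvm_module_for() {
    local cpuinfo=${1:-/proc/cpuinfo}
    if grep -qw vmx "$cpuinfo" 2>/dev/null; then
        echo kvm-intel
    elif grep -qw svm "$cpuinfo" 2>/dev/null; then
        echo kvm-amd
    else
        echo none
    fi
}

module=$(kvm_module_for /proc/cpuinfo)
if [ "$module" = none ]; then
    echo "no hardware virtualization flag found; KVM acceleration is unavailable" >&2
else
    echo "would run: modprobe $module"
fi
```

When the result is `none`, the compute node script later in these notes needs `virt_type = qemu` instead of `kvm`, as its own comment points out.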

reboot

II. Commands to run on the controller node

Note: use SecureCRT for this session. Pasting through PuTTY truncates the input, and the script then fails.
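The install script that follows expects three passwords and suggests `openssl rand -hex 10` for generating them. A small sketch of that step is shown here; the function name `gen_pw` and the `od` fallback are assumptions added for illustration. Hex-only passwords have the side benefit that they can be embedded in the `rabbit://` and `mysql+pymysql://` URLs without any escaping.

```shell
#!/usr/bin/env bash
# gen_pw: print 20 hexadecimal characters (10 random bytes).
# (Hypothetical helper, introduced for illustration.)
gen_pw() {
    if command -v openssl >/dev/null 2>&1; then
        openssl rand -hex 10
    else
        # Fallback, assuming od and /dev/urandom exist on this host.
        od -An -N10 -tx1 /dev/urandom | tr -d ' \n'
        echo
    fi
}

rabbit_openstack_pw=$(gen_pw)
openstack_mysql_user_pw=$(gen_pw)
openstack_user_pw=$(gen_pw)

# Print the assignments so they can be pasted into the scripts.
printf 'rabbit_openstack_pw=%s\n'     "$rabbit_openstack_pw"
printf 'openstack_mysql_user_pw=%s\n' "$openstack_mysql_user_pw"
printf 'openstack_user_pw=%s\n'       "$openstack_user_pw"
```

The same three values have to be pasted into the controller, compute and Cinder scripts, and any place where a script still carries a literal password should be checked against the new values.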

cd ~

cat >install_openstack_train_controller.sh<<'NYC'

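####### Edit the values below by hand [generate the passwords with: openssl rand -hex 10] ###############

# Password of the RabbitMQ user openstack

rabbit_openstack_pw=8fb42ce73ae741692e03

# Controller public IP (with separate internal and external networks, write the internal IP here; after installation, change ServerName in the httpd config to the domain name or the public IP)

controller_ip=192.168.73.7

# Controller internal IP

controller_provider_ip=192.168.73.7

# Controller NIC name (the public-facing NIC, used to bridge the public network)

controller_net_interf_name=ens33

# Password of the database user created for each component

openstack_mysql_user_pw=7a41d5a25691d498e3f8

# Password of each component's service user

openstack_user_pw=c32562d2d221f62d7061

####### Edit the values above by hand ##########################################################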

yum install -y python2-openstackclient openstack-selinux

yum install mariadb mariadb-server python2-PyMySQL memcached -y

yum install rabbitmq-server -y

yum install -y openstack-keystone httpd mod_wsgi openstack-glance openstack-placement-api

yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-console -y

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables ipset iproute -y

yum install openstack-dashboard -y

# Cinder service

#yum install openstack-cinder -y

yum -y install libibverbs

systemctl enable --now rabbitmq-server.service

# Create the RabbitMQ user; remember its password

rabbitmqctl add_user openstack $rabbit_openstack_pw

rabbitmqctl set_user_tags openstack administrator

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

rabbitmq-plugins enable rabbitmq_management

# To hide the web management UI, disable the plugin again

#rabbitmq-plugins disable rabbitmq_management

\cp /etc/sysconfig/memcached{,.bak}

cat >/etc/sysconfig/memcached<<'EOF'

PORT="11211"

USER="memcached"

MAXCONN="65535"

CACHESIZE="1024"

OPTIONS="-l 127.0.0.1,::1,controller"

EOF

systemctl enable --now memcached.service

\cp /etc/my.cnf.d/mariadb-server.cnf{,.bak}

sed -i "/^\[mysqld\]/a bind-address = ${controller_ip}\ndefault-storage-engine = innodb\ninnodb_file_per_table = on\nmax_connections = 4096\ncollation-server = utf8_general_ci\ncharacter-set-server = utf8" /etc/my.cnf.d/mariadb-server.cnf

systemctl enable --now mariadb.service

mysql -uroot -e 'create database keystone;'

mysql -uroot -e 'create database glance;'

mysql -uroot -e 'create database nova;'

mysql -uroot -e 'create database nova_api;'

mysql -uroot -e 'create database nova_cell0;'

mysql -uroot -e 'create database neutron;'

mysql -uroot -e 'create database cinder;'

mysql -uroot -e 'create database placement;'

mysql -uroot -e "grant all privileges on keystone.* to 'keystone'@'localhost' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on keystone.* to 'keystone'@'%' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on glance.* to 'glance'@'localhost' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on glance.* to 'glance'@'%' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on nova.* to 'nova'@'localhost' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on nova.* to 'nova'@'%' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on nova_api.* to 'nova'@'localhost' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on nova_api.* to 'nova'@'%' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on nova_cell0.* to 'nova'@'localhost' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on nova_cell0.* to 'nova'@'%' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on neutron.* to 'neutron'@'localhost' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on neutron.* to 'neutron'@'%' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on cinder.* to 'cinder'@'localhost' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on cinder.* to 'cinder'@'%' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on placement.* to 'placement'@'localhost' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e "grant all privileges on placement.* to 'placement'@'%' identified by '${openstack_mysql_user_pw}';"

mysql -uroot -e 'flush privileges;'

\cp /etc/keystone/keystone.conf{,.bak}

sed -i "/^\[database\]/a connection = mysql+pymysql://keystone:${openstack_mysql_user_pw}@controller/keystone" /etc/keystone/keystone.conf

sed -i '/^\[token\]/a provider = fernet' /etc/keystone/keystone.conf

su -s /bin/sh -c "keystone-manage db_sync" keystone

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

keystone-manage bootstrap --bootstrap-password openstack --bootstrap-admin-url http://controller:5000/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

sed -i "/#ServerName/a ServerName $controller_ip" /etc/httpd/conf/httpd.conf

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

systemctl enable --now httpd

cd ~

cat >admin-openrc <<EOF

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=openstack

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

export OS_VOLUME_API_VERSION=2

EOF

source admin-openrc

################## Known issue: the next command may fail #############################

openstack project create --domain default --description "Service Project" service

#################################################################################

openstack role create user

openstack user create --domain default --password $openstack_user_pw glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image" image

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292

# Glance configuration on the controller

sed -i "/^\[database\]/a connection = mysql+pymysql://glance:${openstack_mysql_user_pw}@controller/glance" /etc/glance/glance-api.conf

sed -i "/^\[keystone_authtoken\]/a www_authenticate_uri = http://controller:5000\nauth_uri = http://controller:5000\nauth_url = http://controller:5000\nmemcached_servers = controller:11211\nauth_type = password\nproject_domain_name = Default\nuser_domain_name = Default\nproject_name = service\nusername = glance\npassword = $openstack_user_pw" /etc/glance/glance-api.conf

sed -i '/^\[paste_deploy\]/a flavor = keystone' /etc/glance/glance-api.conf

sed -i '/^\[glance_store\]/a stores = file,http\ndefault_store = file\nfilesystem_store_datadir = /var/lib/glance/images/' /etc/glance/glance-api.conf

su -s /bin/sh -c "glance-manage db_sync" glance

systemctl enable --now openstack-glance-api.service

cd ~

wget --http-user=qwe --http-passwd=qwe http://61.160.213.184/dl/centos/openstack/small.img

glance image-create --name redhat6.5 --disk-format qcow2 --container-format bare --progress < small.img

openstack user create --domain default --password $openstack_user_pw placement

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

# Placement configuration on the controller

sed -i "/^\[placement_database\]/a connection = mysql+pymysql://placement:${openstack_mysql_user_pw}@controller/placement" /etc/placement/placement.conf

sed -i '/^\[api\]/a auth_strategy = keystone' /etc/placement/placement.conf

sed -i "/^\[keystone_authtoken\]/a www_authenticate_uri = http://controller:5000\nauth_url = http://controller:5000/v3\nmemcached_servers = controller:11211\nauth_type = password\nproject_domain_name = Default\nuser_domain_name = Default\nproject_name = service\nusername = placement\npassword = $openstack_user_pw" /etc/placement/placement.conf

su -s /bin/sh -c "placement-manage db sync" placement

#systemctl restart httpd

openstack user create --domain default --password $openstack_user_pw nova

openstack role add --project service --user nova admin

openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

# Nova configuration on the controller

\cp -a /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

sed -i "/^\[DEFAULT\]/a enabled_apis = osapi_compute,metadata\nmy_ip = ${controller_ip}\ntransport_url = rabbit://openstack:${rabbit_openstack_pw}@controller:5672/\nauth_strategy=keystone\nblock_device_allocate_retries = 600\nallow_resize_to_same_host=true\nuse_neutron = true\nfirewall_driver = nova.virt.firewall.NoopFirewallDriver" /etc/nova/nova.conf

sed -i "/^\[api_database\]/a connection = mysql+pymysql://nova:${openstack_mysql_user_pw}@controller/nova_api" /etc/nova/nova.conf

sed -i "/^\[database\]/a connection = mysql+pymysql://nova:${openstack_mysql_user_pw}@controller/nova" /etc/nova/nova.conf

sed -i '/^\[api\]/a auth_strategy = keystone' /etc/nova/nova.conf

sed -i "/^\[keystone_authtoken\]/a www_authenticate_uri = http://controller:5000\nauth_url = http://controller:5000/v3\nmemcached_servers = controller:11211\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nproject_name = service\nusername = nova\npassword = ${openstack_user_pw}" /etc/nova/nova.conf

# Extra settings that fix resize and migration problems

sed -i "/^\[oslo_messaging_rabbit\]/a rabbit_host=127.0.0.1\nrabbit_port=5672\nrabbit_userid=openstack\nrabbit_password=${rabbit_openstack_pw}" /etc/nova/nova.conf

sed -i '/^\[vnc\]/a enabled = true\nserver_listen = $my_ip\nserver_proxyclient_address = $my_ip' /etc/nova/nova.conf

sed -i '/^\[glance\]/a api_servers = http://controller:9292' /etc/nova/nova.conf

sed -i '/^\[oslo_concurrency\]/a lock_path = /var/lib/nova/tmp' /etc/nova/nova.conf

sed -i "/^\[placement\]/a os_region_name = RegionOne\nproject_domain_name = Default\nproject_name = service\nauth_type = password\nuser_domain_name = Default\nauth_url = http://controller:5000/v3\nusername = placement\npassword = ${openstack_user_pw}" /etc/nova/nova.conf

cp /etc/httpd/conf.d/00-placement-api.conf{,.bak}

cat >>/etc/httpd/conf.d/00-placement-api.conf<<EOF

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

EOF

#systemctl restart httpd

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

systemctl enable --now openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service openstack-nova-console.service

# Run this only after the compute nodes are configured; it registers them

#su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

############################################################################

openstack user create --domain default --password $openstack_user_pw neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696

# Neutron configuration on the controller

\cp /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

sed -i "/^\[DEFAULT\]/a core_plugin = ml2\nservice_plugins = router\nallow_overlapping_ips = true\ntransport_url = rabbit://openstack:${rabbit_openstack_pw}@controller\nauth_strategy = keystone\nnotify_nova_on_port_status_changes = true\nnotify_nova_on_port_data_changes = true" /etc/neutron/neutron.conf

sed -i "/^\[database\]/a connection = mysql+pymysql://neutron:${openstack_mysql_user_pw}@controller/neutron" /etc/neutron/neutron.conf

sed -i "/^\[keystone_authtoken\]/a www_authenticate_uri = http://controller:5000\nauth_url = http://controller:5000\nmemcached_servers = controller:11211\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nproject_name = service\nusername = neutron\npassword = ${openstack_user_pw}" /etc/neutron/neutron.conf

sed -i '/^\[oslo_concurrency\]/a lock_path = /var/lib/neutron/tmp' /etc/neutron/neutron.conf

cat >>/etc/neutron/neutron.conf<<EOF

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = ${openstack_user_pw}

EOF

\cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

cat >>/etc/neutron/plugins/ml2/ml2_conf.ini<<EOF

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

#type_drivers = flat,vlan

#tenant_network_types =

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

EOF

\cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

cat >>/etc/neutron/plugins/ml2/linuxbridge_agent.ini<<EOF

[linux_bridge]

physical_interface_mappings = provider:$controller_net_interf_name

[vxlan]

#enable_vxlan = false

enable_vxlan = true

local_ip = $controller_provider_ip

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

EOF

\cp /etc/neutron/l3_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini

# Added configuration for layer-3 networking

sed -i '/^\[DEFAULT\]/a #interface_driver = linuxbridge\ninterface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver\nexternal_network_bridge =' /etc/neutron/l3_agent.ini

\cp /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

sed -i '/^\[DEFAULT\]/a interface_driver = linuxbridge\ndhcp_driver = neutron.agent.linux.dhcp.Dnsmasq\nenable_isolated_metadata = true' /etc/neutron/dhcp_agent.ini

\cp /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini

sed -i '/^\[DEFAULT\]/a nova_metadata_host = controller\nmetadata_proxy_shared_secret = METADATA_SECRET' /etc/neutron/metadata_agent.ini

# Update /etc/nova/nova.conf (METADATA_SECRET must match the value used above)

sed -i "/^\[neutron\]/a url = http://controller:9696\nauth_url = http://controller:5000\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nregion_name = RegionOne\nproject_name = service\nusername = neutron\npassword = ${openstack_user_pw}\nservice_metadata_proxy = true\nmetadata_proxy_shared_secret = METADATA_SECRET" /etc/nova/nova.conf

cat >>/etc/sysctl.conf<<EOF

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# Load the bridge netfilter module

modprobe br_netfilter

sysctl -p

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

systemctl restart openstack-nova-api.service

systemctl enable --now neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service

## Horizon configuration on the controller

\cp /etc/openstack-dashboard/local_settings{,.bak}

egrep -v '^$|#' /etc/openstack-dashboard/local_settings.bak >/etc/openstack-dashboard/local_settings

sed -i '/OPENSTACK_HOST =/c OPENSTACK_HOST = "controller"' /etc/openstack-dashboard/local_settings

sed -i "/ALLOWED_HOSTS =/c ALLOWED_HOSTS = ['*', ]" /etc/openstack-dashboard/local_settings

sed -i "/ALLOWED_HOSTS =/a SESSION_ENGINE = 'django.contrib.sessions.backends.cache'" /etc/openstack-dashboard/local_settings

cat >>/etc/openstack-dashboard/local_settings<<'EOF'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

EOF

sed -i '/OPENSTACK_KEYSTONE_URL/a OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True' /etc/openstack-dashboard/local_settings

cat >>/etc/openstack-dashboard/local_settings<<'EOF'

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

EOF

cat >>/etc/openstack-dashboard/local_settings<<'EOF'

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

WEBROOT = '/dashboard/'

EOF

cd /usr/share/openstack-dashboard

python manage.py make_web_conf --apache > /etc/httpd/conf.d/openstack-dashboard.conf

ln -s /etc/openstack-dashboard /usr/share/openstack-dashboard/openstack_dashboard/conf

\cp /etc/httpd/conf.d/openstack-dashboard.conf{,.bak}

sed -ri 's#(.*WSGIScriptAlias ).*$#\1 /dashboard /usr/share/openstack-dashboard/openstack_dashboard/wsgi/django.wsgi#g' /etc/httpd/conf.d/openstack-dashboard.conf

sed -i '/Alias \/static/c \ \ \ \ Alias /dashboard/static /usr/share/openstack-dashboard/static' /etc/httpd/conf.d/openstack-dashboard.conf

systemctl restart httpd.service memcached.service

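NYC

# Edit the values in the script, then run it

bash install_openstack_train_controller.sh

# Change ServerName in /etc/httpd/conf/httpd.conf to the public IP address

sed -i '/^ServerName/c ServerName 103.73.119.7' /etc/httpd/conf/httpd.conf

systemctl restart httpd

# Run the initial database security setup

mysql_secure_installation

III. Commands to run on the compute nodes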

cd ~

cat >install_openstack_train_compute.sh<<'NYC'

#read -p "please input this host IP address:" compute_ip

#read -p "please input this host interface name:" compute_net_interf_name

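####### Edit the values below by hand [generate the passwords with: openssl rand -hex 10] ###############

# Password of the RabbitMQ user openstack

rabbit_openstack_pw=8fb42ce73ae741692e03

# Controller public IP (with a single public network, write the public IP; with internal and external networks, still write the public IP)

controller_ip=103.73.119.7

# Compute node IP (with a single public network, write the public IP; with internal and external networks, write the internal IP)

compute_ip=192.168.73.8

# Compute node internal IP

compute_provider_ip=192.168.73.8

# Compute node NIC name (the public-facing NIC, used to bridge the public network)

compute_net_interf_name=ens33

# Password of each component's service user

openstack_user_pw=c32562d2d221f62d7061

####### Edit the values above by hand ##########################################################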

yum install openstack-nova-compute -y

yum install openstack-neutron-linuxbridge ebtables ipset iproute -y

# Cinder service

#yum install lvm2 device-mapper-persistent-data openstack-cinder targetcli python2-keystone -y

# Nova configuration on the compute node

\cp -a /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

sed -i "/^\[DEFAULT\]/a enabled_apis = osapi_compute,metadata\ntransport_url = rabbit://openstack:${rabbit_openstack_pw}@controller\nmy_ip = ${compute_ip}\nauth_strategy=keystone\nblock_device_allocate_retries = 600\nresume_guests_state_on_host_boot=true\nuse_neutron = true\nfirewall_driver = nova.virt.firewall.NoopFirewallDriver" /etc/nova/nova.conf

sed -i '/^\[api\]/a auth_strategy = keystone' /etc/nova/nova.conf

sed -i "/^\[keystone_authtoken\]/a www_authenticate_uri = http://controller:5000\nauth_url = http://controller:5000/v3\nmemcached_servers = controller:11211\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nproject_name = service\nusername = nova\npassword = ${openstack_user_pw}" /etc/nova/nova.conf

# For controller_ip below: use the domain name if there is one, otherwise the IP address

sed -i "/^\[vnc\]/a enabled = True\nserver_listen = 0.0.0.0\nserver_proxyclient_address = \$my_ip\nnovncproxy_base_url = http://${controller_ip}:6080/vnc_auto.html" /etc/nova/nova.conf

sed -i '/^\[glance\]/a api_servers = http://controller:9292' /etc/nova/nova.conf

sed -i '/^\[oslo_concurrency\]/a lock_path = /var/lib/nova/tmp' /etc/nova/nova.conf

sed -i "/^\[placement\]/a os_region_name = RegionOne\nproject_domain_name = Default\nproject_name = service\nauth_type = password\nuser_domain_name = Default\nauth_url = http://controller:5000/v3\nusername = placement\npassword = ${openstack_user_pw}" /etc/nova/nova.conf

# When deploying OpenStack inside virtual machines, change this to virt_type = qemu and cpu_mode = none

sed -i '/^\[libvirt\]/a virt_type = kvm\ncpu_mode = host-passthrough' /etc/nova/nova.conf

systemctl enable --now libvirtd.service openstack-nova-compute.service

# Neutron configuration on the compute node

\cp /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

sed -i "/^\[DEFAULT\]/a transport_url = rabbit://openstack:${rabbit_openstack_pw}@controller\nauth_strategy = keystone" /etc/neutron/neutron.conf

sed -i "/^\[keystone_authtoken\]/a www_authenticate_uri = http://controller:5000\nauth_url = http://controller:5000\nmemcached_servers = controller:11211\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nproject_name = service\nusername = neutron\npassword = ${openstack_user_pw}" /etc/neutron/neutron.conf

sed -i '/^\[oslo_concurrency\]/a lock_path = /var/lib/neutron/tmp' /etc/neutron/neutron.conf

\cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

# The file only contains a [DEFAULT] section; append the rest (make sure the physical NIC name matches)

cat >>/etc/neutron/plugins/ml2/linuxbridge_agent.ini<<EOF

[linux_bridge]

physical_interface_mappings = provider:${compute_net_interf_name}

[vxlan]

#enable_vxlan = false

enable_vxlan = true

local_ip = $compute_provider_ip

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

EOF

sed -i "/^\[neutron\]/a url = http://controller:9696\nauth_url = http://controller:5000\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nregion_name = RegionOne\nproject_name = service\nusername = neutron\npassword = ${openstack_user_pw}" /etc/nova/nova.conf

cat >>/etc/sysctl.conf<<EOF

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# Load the bridge netfilter module

modprobe br_netfilter

sysctl -p

systemctl stop openstack-nova-compute.service

systemctl start openstack-nova-compute.service

systemctl enable --now neutron-linuxbridge-agent.service

# Fix live migration (let libvirtd listen on TCP)

cat >>/etc/libvirt/libvirtd.conf<<EOF

listen_tls = 0

listen_tcp = 1

auth_tcp = "none"

EOF

cat >>/etc/sysconfig/libvirtd<<'EOF'

LIBVIRTD_ARGS="--listen"

EOF

service libvirtd restart

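NYC

# Edit the values in the script, then run it

bash install_openstack_train_compute.sh

Update the domain name in the [vnc] section on the compute node (only if you have a domain name):

sed -i "/novncproxy_base_url = http:\/\/103.73.119.106:6080\/vnc_auto.html/c novncproxy_base_url = http://106.host666.net:6080/vnc_auto.html" /etc/nova/nova.conf

systemctl restart openstack-nova-compute.service

Set up passwordless SSH between all compute nodes (each node must also trust itself) to fix cold migration failures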

Method 1:

ssh-keygen -N '' -f ~/.ssh/id_rsa

# Interactive

for i in `awk '{print $2}' /etc/hosts|sed -n '4,$'p`;do ssh-copy-id $i;done

# Interactive: resize connects by IP, so the point here is to answer "yes" to the host key prompt; otherwise resize fails

for i in `awk '{print $1}' /etc/hosts|sed -n '4,$'p`;do ssh $i 'ip a';done

sed -i '/User=/c User=root' /usr/lib/systemd/system/openstack-nova-compute.service

# Restart the service

systemctl daemon-reload

systemctl restart openstack-nova-compute.service

Method 2 (slightly more work):

Reference: http://www.javashuo.com/article/p-qhqaubxn-gk.html

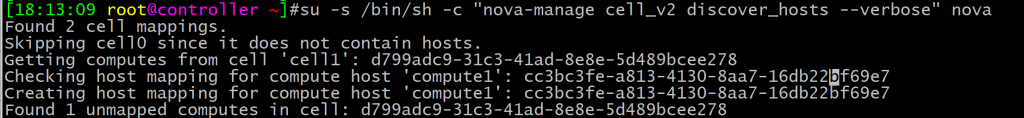

IV. Once the compute nodes are configured, run this command on the controller to register them

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
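`discover_hosts` has to be repeated each time a compute node is added. Nova also has a `[scheduler]` option, `discover_hosts_in_cells_interval`, that makes the scheduler run the discovery periodically. The sketch below adds it in the same `sed` style used throughout these notes. The function name `enable_periodic_discovery` and the 300-second interval are illustrative choices, and the demonstration runs on a scratch file rather than the real `/etc/nova/nova.conf`.

```shell
#!/usr/bin/env bash
# enable_periodic_discovery CONF [SECONDS]: set discover_hosts_in_cells_interval
# under [scheduler], creating the section if it does not exist yet.
# (Hypothetical helper, introduced for illustration; requires GNU sed.)
enable_periodic_discovery() {
    local conf=$1 interval=${2:-300}
    # Already configured: leave the file alone.
    grep -q '^discover_hosts_in_cells_interval' "$conf" && return 0
    if grep -q '^\[scheduler\]' "$conf"; then
        sed -i "/^\[scheduler\]/a discover_hosts_in_cells_interval = ${interval}" "$conf"
    else
        printf '\n[scheduler]\ndiscover_hosts_in_cells_interval = %s\n' "$interval" >> "$conf"
    fi
}

# Demonstration on a scratch copy.
demo=$(mktemp)
printf '[DEFAULT]\n[scheduler]\n[vnc]\n' > "$demo"
enable_periodic_discovery "$demo" 300
cat "$demo"
```

On the controller this would presumably be called with `/etc/nova/nova.conf` and followed by `systemctl restart openstack-nova-scheduler.service`.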

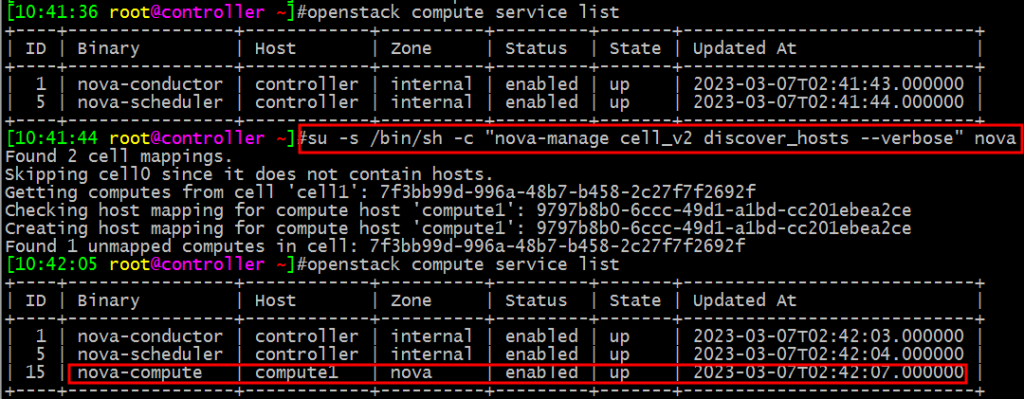

Check that the compute nodes are listed

source admin-openrc

openstack compute service list

# or

#nova service-list

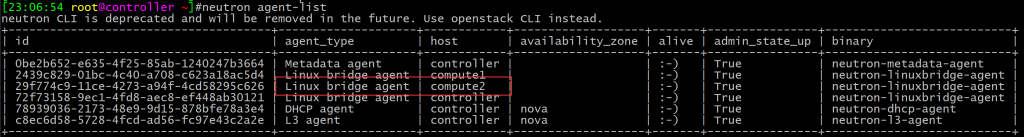

neutron agent-list

Reboot the systems before accessing the dashboard

V. Access the web dashboard

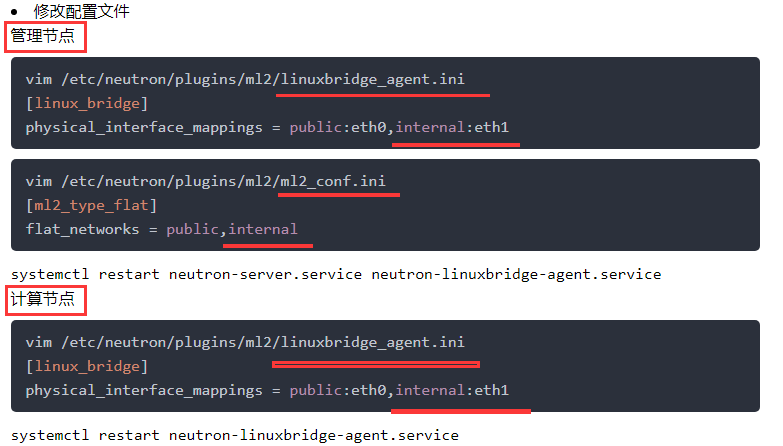

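http://103.73.119.7/dashboard

Step VI covers layer-2 networking; for layer-3 networking, see the reference notebook.

VI. Internal network setup. Reference: "Configuring a flat network in OpenStack (one internal network, one external network, two NICs)" (huati365.com)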

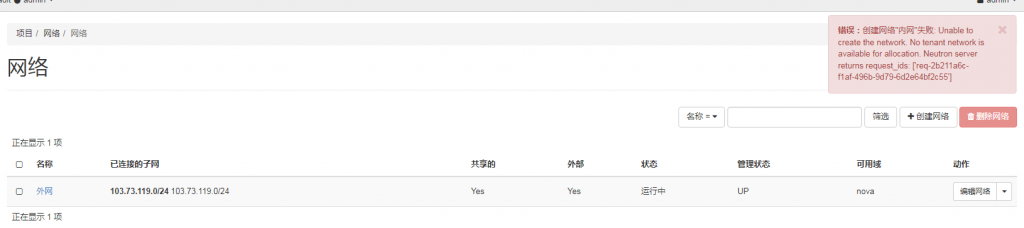

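In testing, the internal network could only be created from the command line; creating it in the dashboard failed.

Create the internal network from the command line: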

openstack network create --share --external --provider-physical-network private --provider-network-type flat 内网

Then create the subnet through the dashboard.
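The `network create` command above names the physical network `private`, while the ML2 and Linux bridge agent files written by the install scripts only mention `provider`. Neutron accepts a flat network only on a physical network that is listed in `flat_networks` and mapped to a NIC in `physical_interface_mappings`. A sketch of adding that mapping follows. The function name `add_physnet` is hypothetical, and `ens34` is an assumed name for the second (internal) NIC, which these notes do not state. The demonstration works on scratch files.

```shell
#!/usr/bin/env bash
# add_physnet ML2_CONF AGENT_CONF PHYSNET NIC: register an extra flat physical
# network and map it to a NIC. (Hypothetical helper; requires GNU sed.)
add_physnet() {
    local ml2=$1 agent=$2 physnet=$3 nic=$4
    # Extend flat_networks unless the name is already listed.
    grep -q "^flat_networks = .*${physnet}" "$ml2" ||
        sed -i "s/^flat_networks = .*/&,${physnet}/" "$ml2"
    # Extend the NIC mapping unless the physical network is already mapped.
    grep -q "^physical_interface_mappings = .*${physnet}:" "$agent" ||
        sed -i "s/^physical_interface_mappings = .*/&,${physnet}:${nic}/" "$agent"
}

# Demonstration on scratch copies of the two files.
ml2=$(mktemp); agent=$(mktemp)
printf '[ml2_type_flat]\nflat_networks = provider\n' > "$ml2"
printf '[linux_bridge]\nphysical_interface_mappings = provider:ens33\n' > "$agent"
add_physnet "$ml2" "$agent" private ens34   # ens34 is an assumed NIC name
cat "$ml2" "$agent"
```

On a real deployment the files would be `/etc/neutron/plugins/ml2/ml2_conf.ini` on the controller and `/etc/neutron/plugins/ml2/linuxbridge_agent.ini` on every node, followed by a restart of `neutron-server` and `neutron-linuxbridge-agent`.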

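VII. Cinder storage

Reference: http://61.160.213.184/archives/1076.html

If the storage node is not also a controller or compute node, install the OpenStack Train repository on it separately.

Install on the storage node: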

yum install centos-release-openstack-train -y

# Install the LVM packages:

yum install -y lvm2 device-mapper-persistent-data

# Start the LVM metadata service and enable it at boot:

systemctl enable --now lvm2-lvmetad.service

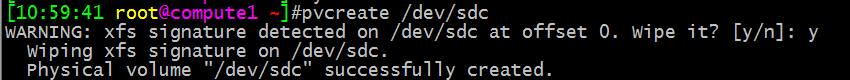

# Create the LVM physical volumes /dev/sdb, /dev/sdc and /dev/sdd (interactive)

pvcreate /dev/sdb

pvcreate /dev/sdc

pvcreate /dev/sdd

# Create the LVM volume group cinder-volumes-xxx (use a different volume group name on each host)

vgcreate ssd /dev/sdb

#vgcreate sata /dev/sdc

#vgcreate m2 /dev/sdd

# With several disks in one group, run this command instead

#vgcreate cinder-volumes /dev/sdb /dev/sdc /dev/sdd

cp /etc/lvm/lvm.conf{,.bak}

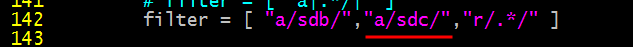

# Configure the device filter so that only instances access the volume group: "a" accepts a disk, "r" rejects it

sed -i '141a \ \ \ \ \ \ \ \ filter = [ "a/sdb/","r/.*/" ]' /etc/lvm/lvm.conf

# With several disks, run this statement instead

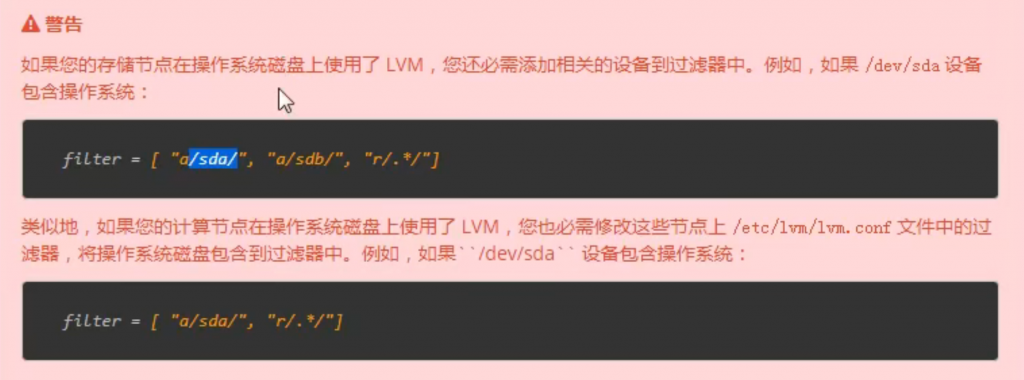

#sed -i '141a \ \ \ \ \ \ \ \ filter = [ "a/sdb/","a/sdc/","a/sdd/", "r/.*/" ]' /etc/lvm/lvm.conf

Note: if the storage node or a compute node itself uses LVM partitions, those devices must be added to the filter as well.
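The `sed '141a ...'` commands above depend on the `devices {` block starting at a fixed line number, which can differ between `lvm.conf` versions. A sketch that anchors on the `devices {` line instead is given below. The function name `add_lvm_filter` is hypothetical, and `sda` stands in for an operating system disk that itself uses LVM, which is an assumption made to illustrate the note above. The demonstration edits a scratch file.

```shell
#!/usr/bin/env bash
# add_lvm_filter CONF FILTER: insert "filter = FILTER" right after the
# "devices {" line, unless an active filter line already exists.
# (Hypothetical helper, introduced for illustration; requires GNU sed.)
add_lvm_filter() {
    local conf=$1 filter=$2
    grep -Eq '^[[:space:]]*filter[[:space:]]*=' "$conf" && return 0
    sed -i "/^devices {/a filter = ${filter}" "$conf"
}

# Demonstration on a scratch file shaped like lvm.conf.
demo=$(mktemp)
printf 'config {\n}\ndevices {\n    dir = "/dev"\n}\n' > "$demo"
# Accept the (assumed) OS disk sda and the Cinder disk sdb, reject the rest.
add_lvm_filter "$demo" '[ "a/sda/", "a/sdb/", "r/.*/" ]'
cat "$demo"
```

LVM does not require the indentation that the original command adds, so the sketch leaves it out; the accept entries still need to be adjusted to the disks actually present on each node.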

cat >store_inatall_cinder.sh<<'NYC'

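####### Edit the values below by hand [generate the passwords with: openssl rand -hex 10] ###############

# Password of the RabbitMQ user openstack

rabbit_openstack_pw=8fb42ce73ae741692e03

# IP of the Cinder node (the internal IP when there are internal and external networks); if this is a controller or compute node, use that node's IP

cinder_ip=192.168.73.9

# Volume group names; they differ per node and must match the volume groups created above

volume_name1=ssd

volume_name2=sata

volume_name3=m2

# Password of the database user created for each component

openstack_mysql_user_pw=7a41d5a25691d498e3f8

# Password of each component's service user

openstack_user_pw=c32562d2d221f62d7061

####### Edit the values above by hand ##########################################################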

# targetcli is the tool used by the iSCSI server side

yum install -y openstack-cinder targetcli python-keystone

cp /etc/cinder/cinder.conf{,.bak}

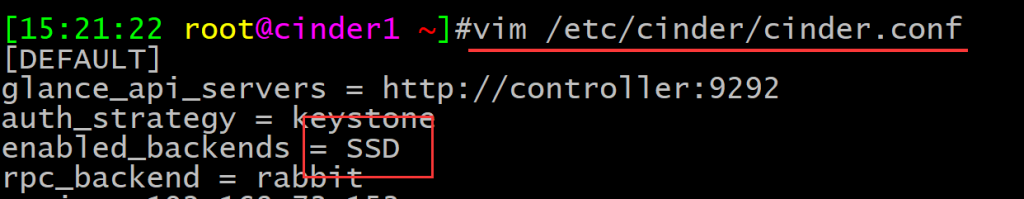

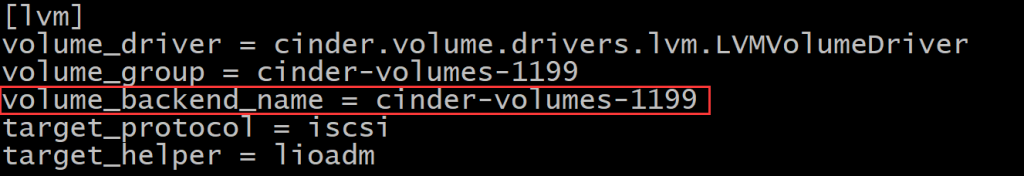

#此处的my_ip是存储节点的IP地址,enabled_backends = lvm是定义后端名称,可以自定义,最好对应卷组名,可以对应多个卷组名:enabled_backends = ssd,sata,m2

sed -i "/^\[DEFAULT\]/a glance_api_servers = http://controller:9292\nauth_strategy = keystone\nenabled_backends = ${volume_name1},${volume_name2},${volume_name3}\nrpc_backend = rabbit\nmy_ip = ${cinder_ip}\ntransport_url = rabbit://openstack:${rabbit_openstack_pw}@controller" /etc/cinder/cinder.conf

sed -i "/^\[database\]/a connection = mysql+pymysql://cinder:${openstack_mysql_user_pw}@controller/cinder" /etc/cinder/cinder.conf

sed -i "/^\[oslo_concurrency\]/a lock_path = /var/lib/cinder/tmp" /etc/cinder/cinder.conf

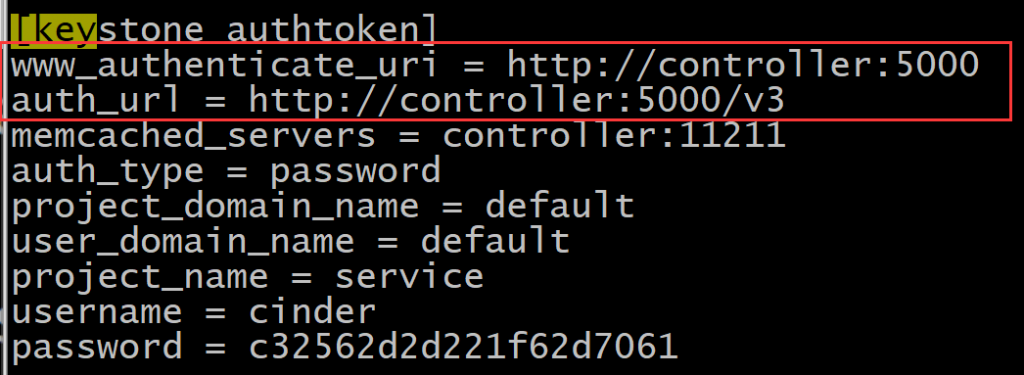

sed -i "/^\[keystone_authtoken\]/a www_authenticate_uri = http://controller:5000\nauth_url = http://controller:5000/v3\nmemcached_servers = controller:11211\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nproject_name = service\nusername = cinder\npassword = ${openstack_user_pw}" /etc/cinder/cinder.conf

#此处不能使用#'EOF'#,不然变量无法传参,有几个卷类型就写几个区域,也对应卷组名

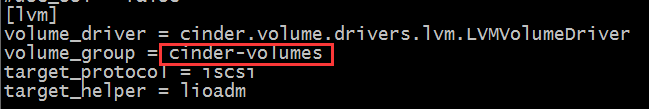

cat >>/etc/cinder/cinder.conf<<EOF

[${volume_name1}]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = $volume_name1

volume_backend_name = $volume_name1

target_protocol = iscsi

target_helper = lioadm

[${volume_name2}]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = $volume_name2

volume_backend_name = $volume_name2

target_protocol = iscsi

target_helper = lioadm

[${volume_name3}]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = $volume_name3

volume_backend_name = $volume_name3

target_protocol = iscsi

target_helper = lioadm

EOF

systemctl enable --now openstack-cinder-volume.service target.service

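NYC

After changing the IP, also adjust the volume group names and the backend sections, then run the Cinder install script on the storage node.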

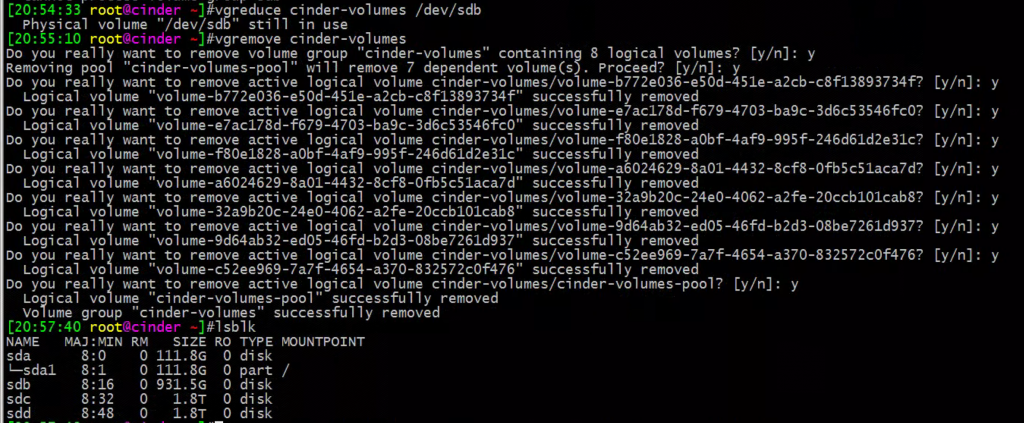

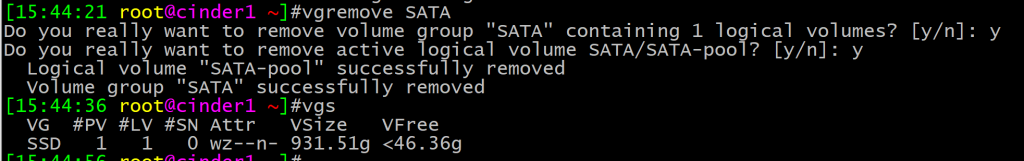

bash store_inatall_cinder.sh

If you no longer want to use this Cinder service, simply remove the volume group:

vgremove cinder-volumes

On the controller node:

# Edit the hosts file and adjust the IP (skip this step if storage is deployed on a compute node)

cat >>/etc/hosts<<'EOF'

192.168.73.119 cinder1

EOF

for i in `awk '{print $2}' /etc/hosts|sed -n '3,$'p`;do scp /etc/hosts ${i}:/etc;done

cd ~

cat >controller_set_cinder.sh<<'NYC'

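####### Edit the values below by hand [generate the passwords with: openssl rand -hex 10] ###############

# Password of the RabbitMQ user openstack

rabbit_openstack_pw=8fb42ce73ae741692e03

# Controller IP (with a single public network, write the public IP; with internal and external networks, still write the public IP)

controller_ip=103.73.119.7

# Password of each component's service user

openstack_user_pw=c32562d2d221f62d7061

# Password of the database user created for each component

openstack_mysql_user_pw=7a41d5a25691d498e3f8

####### Edit the values above by hand ##########################################################

# The cinder database was already created by the controller script; nothing to do here

# Create the cinder user and set its password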

cd ~

source admin-openrc

openstack user create --domain default --password $openstack_user_pw cinder

openstack role add --project service --user cinder admin

openstack service create --name cinder --description "OpenStack Block Storage" volume

openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

openstack service create --name cinderv3 --description "OpenStack Block Storage" volumev3

openstack endpoint create --region RegionOne volume public http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volume internal http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volume admin http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev3 public http://controller:8776/v3/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev3 internal http://controller:8776/v3/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev3 admin http://controller:8776/v3/%\(tenant_id\)s

yum install -y openstack-cinder

cp /etc/cinder/cinder.conf{,.bak}

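# my_ip is the controller node's IP

# The official guide sets only 3 options in [DEFAULT] and nothing about the volumes on the storage nodes, but the glance setting is needed as well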

sed -i "/^\[DEFAULT\]/a glance_api_servers = http://controller:9292\nauth_strategy = keystone\ntransport_url = rabbit://openstack:${rabbit_openstack_pw}@controller\nmy_ip = ${controller_ip}" /etc/cinder/cinder.conf

sed -i "/^\[database\]/a connection = mysql+pymysql://cinder:${openstack_mysql_user_pw}@controller/cinder" /etc/cinder/cinder.conf

sed -i "/^\[keystone_authtoken\]/a www_authenticate_uri = http://controller:5000\nauth_url = http://controller:5000/v3\nmemcached_servers = controller:11211\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nproject_name = service\nusername = cinder\npassword = ${openstack_user_pw}" /etc/cinder/cinder.conf

sed -i '/^\[oslo_concurrency\]/a lock_path = /var/lib/cinder/tmp' /etc/cinder/cinder.conf

# Configure nova to use cinder

sed -i "/^\[cinder\]/a os_region_name=RegionOne" /etc/nova/nova.conf

su -s /bin/sh -c "cinder-manage db sync" cinder

systemctl restart openstack-nova-api.service

systemctl enable --now openstack-cinder-api.service openstack-cinder-scheduler.service

NYC

Edit the values above, then run the script.

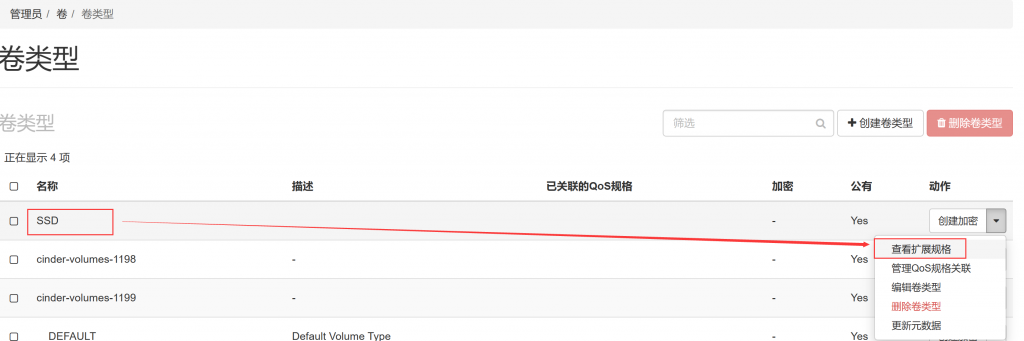

bash controller_set_cinder.sh

Create volume types so that a new volume can be placed on a specific storage node

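# Volume group name: it differs on every node and must match the volume group created above (there are two storage nodes here; remember to adjust)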

source admin-openrc

volume_name=sata

openstack volume type create $volume_name

openstack volume type set $volume_name --property volume_backend_name=$volume_name
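With several backends, the two commands above repeat once per name. A minimal sketch of a loop follows, assuming each volume type is named after its `volume_backend_name` as in these notes. The function name `create_backend_types` and the `OPENSTACK` override are hypothetical.

```shell
# Create one volume type per backend name and bind it to the backend of the
# same name. Set OPENSTACK=echo to preview the commands without running them.
create_backend_types() {
    for name in "$@"; do
        ${OPENSTACK:-openstack} volume type create "$name"
        ${OPENSTACK:-openstack} volume type set "$name" --property volume_backend_name="$name"
    done
}
```

After `source admin-openrc`, a call such as `create_backend_types ssd sata m2` would cover three backends.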

### Create additional volume types if needed

#volume_name1=ssd

#volume_name2=sata

#volume_name3=m2

#openstack volume type create $volume_name1

#openstack volume type create $volume_name2

#openstack volume type create $volume_name3

# Associate each type with its backend host

#openstack volume type set $volume_name1 --property volume_backend_name=$volume_name1

#openstack volume type set $volume_name2 --property volume_backend_name=$volume_name2

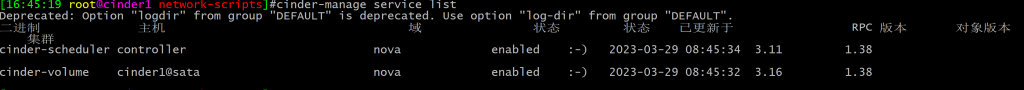

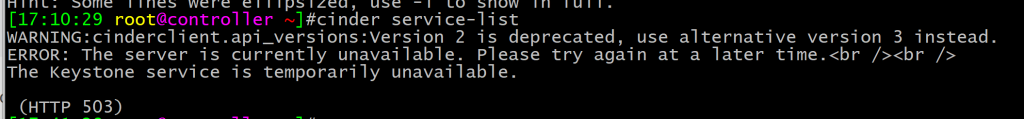

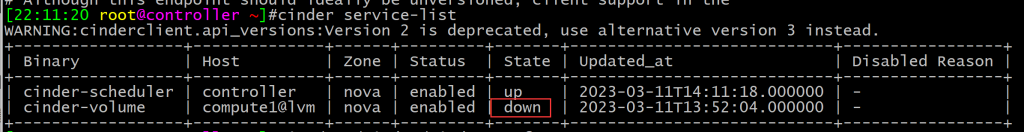

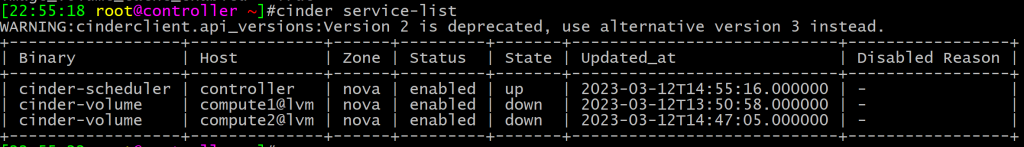

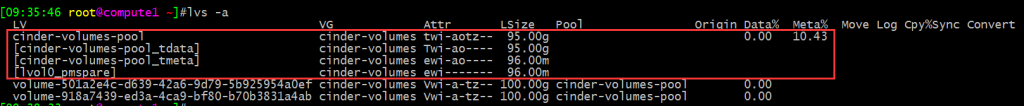

#openstack volume type set $volume_name3 --property volume_backend_name=$volume_name3验证逻辑卷是否添加成功

cinder service-list

A storage backend can be removed from either the controller node or the cinder node; the steps below are run on the storage node.

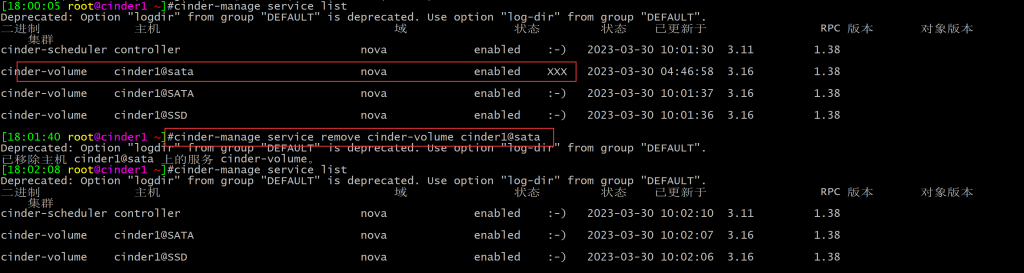

cinder-manage service list

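On the storage node, delete the backend's section name from the configuration

The original setting was: enabled_backends = SSD,SATA

Restart the service after the deletion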

systemctl restart openstack-cinder-volume.service

cinder-manage service list

cinder-manage service remove <binary> <host>

cinder-manage service remove cinder-volume cinder1@sata

Then remove the corresponding volume group

The cinder deployment is complete

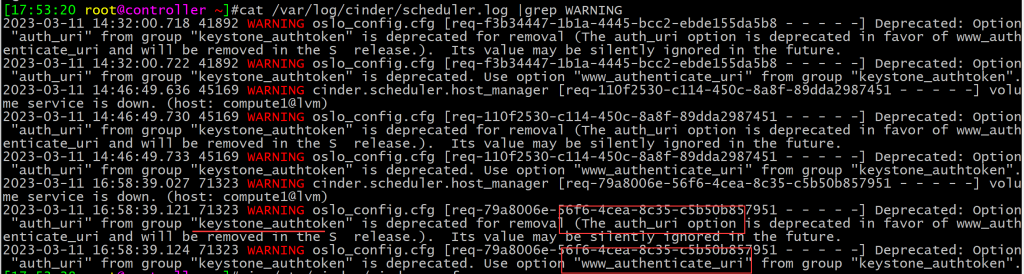

Error:

Check the log on the compute node:

cat /var/log/cinder/scheduler.log |grep WARNING

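The cause is that the Queens configuration style differs from Train's (/etc/cinder/cinder.conf): auth_uri must be changed to www_authenticate_uri, on both the controller node and the storage nodes.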
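The rename can be scripted. A minimal sketch follows, assuming GNU sed (as on CentOS 7) and that the option appears as a plain `auth_uri = ...` line. The function name `rename_auth_uri` and the backup suffix are hypothetical.

```shell
# Rename the Queens-era auth_uri option to www_authenticate_uri.
# A backup copy is kept next to the file before it is edited in place.
rename_auth_uri() {
    conf=${1:-/etc/cinder/cinder.conf}
    cp -p "$conf" "$conf.bak.auth_uri"
    # Anchoring on "auth_uri" followed by "=" leaves auth_url untouched.
    sed -i 's/^auth_uri[[:space:]]*=/www_authenticate_uri =/' "$conf"
}
```

The cinder services on the edited node still need a restart afterwards (openstack-cinder-api and openstack-cinder-scheduler on the controller, openstack-cinder-volume on the storage nodes).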

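After the change the logical volumes are visible again, but their state is down

References for adding volume groups from multiple cinder nodes:

1. devstack cinder-volume service state is down (dhkfo66064's blog, CSDN)

2. https://www.codenong.com/js69de324304f6/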

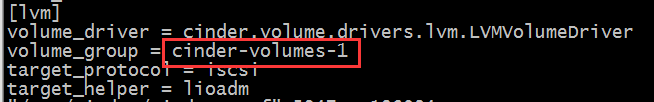

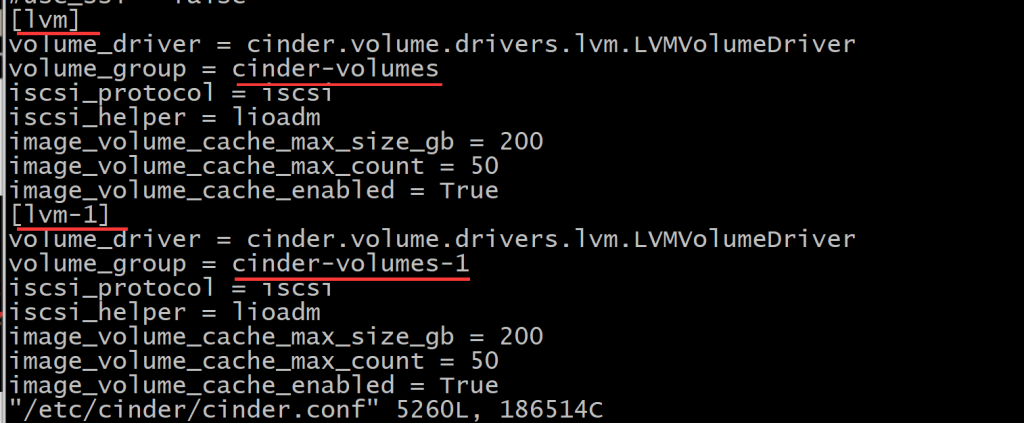

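Configuration in /etc/cinder/cinder.conf on the compute nodes (the volume group names differ)

Configuration on compute1

Configuration on compute2

(This step is no longer needed) Configuration in /etc/cinder/cinder.conf on the controller node; it must match the sections and volume group names on the compute nodes.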

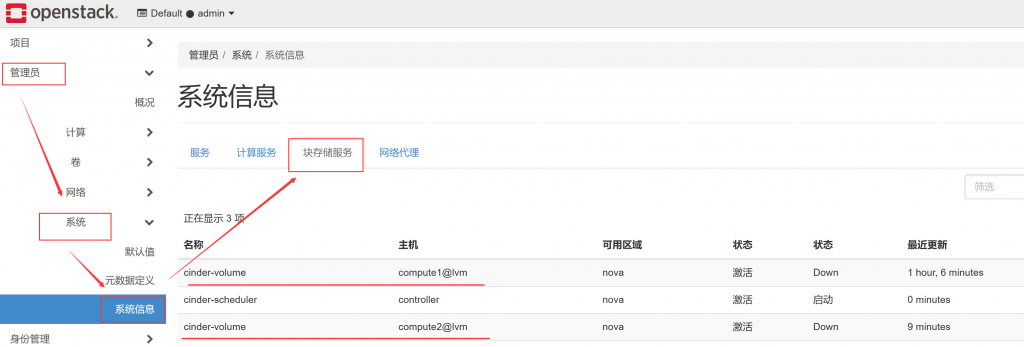

List the storage nodes:

cinder service-list

All of them are in the down state; to be resolved.

This was a RabbitMQ problem: deleting the settings in the oslo_messaging_rabbit section and using transport_url = rabbit://openstack:${rabbit_openstack_pw}@controller instead fixed the down state on compute1.
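A minimal sketch of that change follows, assuming GNU sed. It comments out the `rabbit_*` keys rather than deleting them, which is a more cautious variant of what the note describes. The function name `use_transport_url` is hypothetical.

```shell
# Disable the rabbit_* keys in [oslo_messaging_rabbit] and make sure
# transport_url is set under [DEFAULT].
# Arguments: path to cinder.conf, full transport URL.
use_transport_url() {
    conf=$1
    url=$2
    # Only lines between [oslo_messaging_rabbit] and the next section header
    # are touched.
    sed -i '/^\[oslo_messaging_rabbit\]/,/^\[/ s/^rabbit_/#rabbit_/' "$conf"
    # Append transport_url after [DEFAULT] unless one is already present.
    grep -q '^transport_url' "$conf" ||
        sed -i "/^\[DEFAULT\]/a transport_url = ${url}" "$conf"
}
```

For example: `use_transport_url /etc/cinder/cinder.conf "rabbit://openstack:${rabbit_openstack_pw}@controller"`, followed by `systemctl restart openstack-cinder-volume.service`.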

Note: a clock that is out of sync with the other nodes also causes cinder-volume to show as down.
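The reason is that a service counts as up only while its last heartbeat is recent enough; cinder's `service_down_time` option defaults to 60 seconds. The sketch below is a simplified model of that check, not cinder's actual code, and the function name `service_state` is hypothetical.

```shell
# Report "up" if the last heartbeat is no older than the threshold,
# otherwise "down".
# Arguments: current time and heartbeat time as epoch seconds,
# optional threshold in seconds (default 60).
service_state() {
    now=$1
    heartbeat=$2
    threshold=${3:-60}
    age=$((now - heartbeat))
    if [ "$age" -le "$threshold" ]; then
        echo up
    else
        echo down
    fi
}
```

A quick skew check from the controller could be `service_state "$(date +%s)" "$(ssh cinder1 date +%s)"`: if the storage node's clock is more than a minute behind, even a fresh heartbeat looks stale.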

The storage nodes are also visible in the dashboard

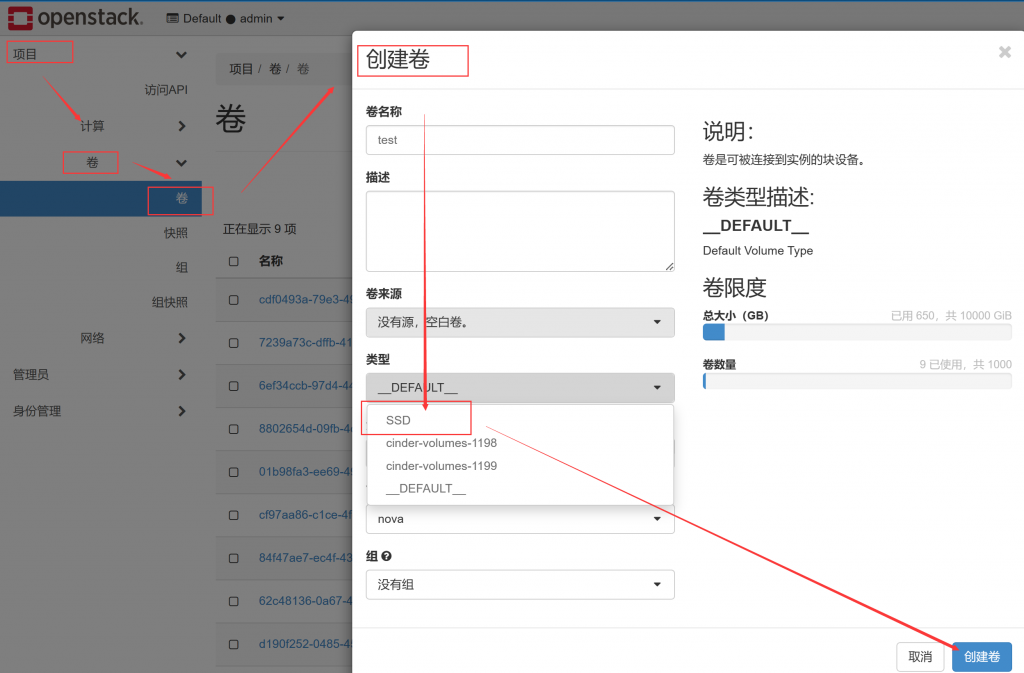

8. Using volumes

Creating a volume

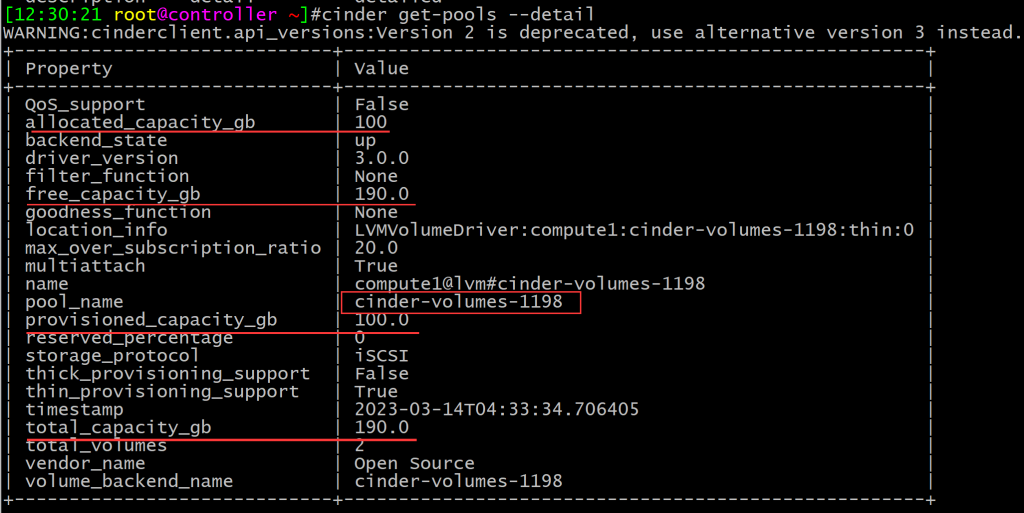

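Although the volume group has only 100G of capacity, logical volumes larger than 100G can be created. By default a metadata space is created that takes up 95G of the volume group.

Reference on the metadata space: https://blog.csdn.net/wizardyjh/article/details/82752149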

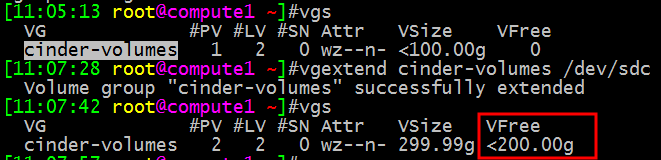

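Extend the logical volume on the compute node, that is, on the server that provides the volume group

# volume group name/logical volume name

lvextend -L +4.8G cinder-volumes/cinder-volumes-pool

Add all of the remaining free space

# lowercase letter l

lvextend -l +100%FREE /dev/centos/root

Extending the volume group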

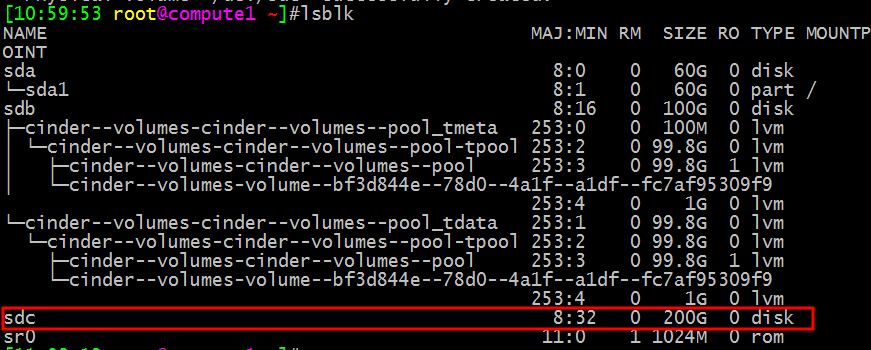

Hot-add a disk

echo '- - -' >/sys/class/scsi_host/host0/scan

mkfs.xfs -f /dev/sdc

Edit the /etc/lvm/lvm.conf configuration file

Restart the service

systemctl restart lvm2-lvmetad.service

pvcreate /dev/sdc

Extend the volume group
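The notes give no command for this step. A minimal sketch follows, assuming the new physical volume is /dev/sdc (created with pvcreate above) and the volume group is cinder-volumes as used elsewhere in these notes. The function name `extend_vg` and the `RUN` override are hypothetical.

```shell
# Add a prepared physical volume to a volume group and show the result.
# Set RUN=echo to preview the commands; real execution needs root.
extend_vg() {
    vg=$1
    dev=$2
    ${RUN:-} vgextend "$vg" "$dev"
    ${RUN:-} vgs "$vg"
}
```

For example: `extend_vg cinder-volumes /dev/sdc`. The VFree column reported by `vgs` should grow by roughly the size of the new disk.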

Remove the logical volume

lvremove -f /dev/cinder-volumes/cinder-volumes-pool

Check the logical volume usage

cinder get-pools --detail

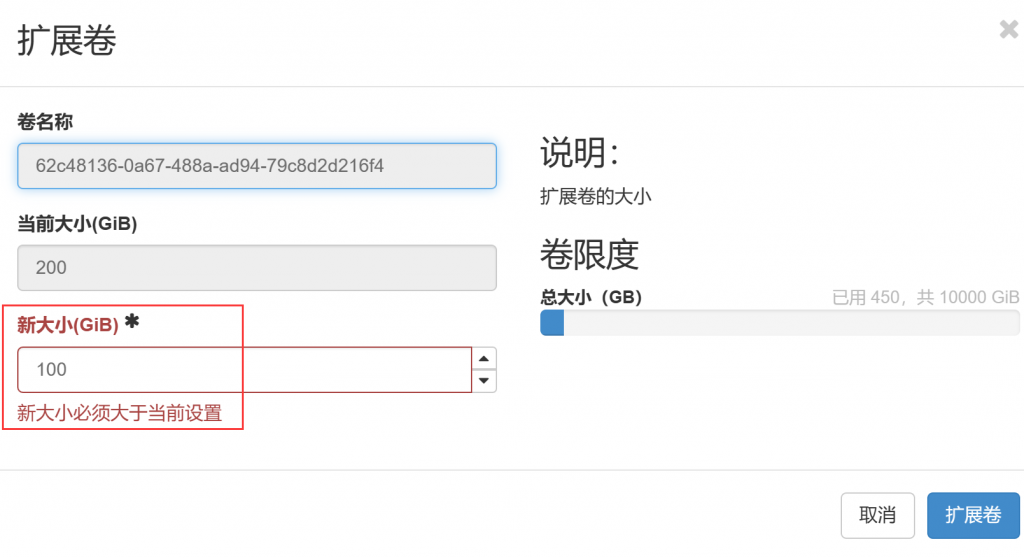

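Extending a volume

A volume that is not attached to an instance can be extended, but not shrunk

A volume that is attached to an instance cannot be extended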
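These two rules can be checked before calling the CLI. A minimal sketch follows. The function name `plan_volume_extend` is hypothetical, and the status values `available` and `in-use` are the ones cinder reports for detached and attached volumes.

```shell
# Print the extend command when the rules above allow it; otherwise say why
# not and return non-zero.
# Arguments: volume name, status, current size in GB, requested size in GB.
plan_volume_extend() {
    name=$1
    status=$2
    cur_gb=$3
    new_gb=$4
    if [ "$status" != "available" ]; then
        echo "skip: $name is $status, detach it first"
        return 1
    fi
    if [ "$new_gb" -le "$cur_gb" ]; then
        echo "skip: $name can only grow, not shrink"
        return 1
    fi
    echo "openstack volume set --size $new_gb $name"
}
```

The status and size of a volume can be read with `openstack volume show <name>`.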

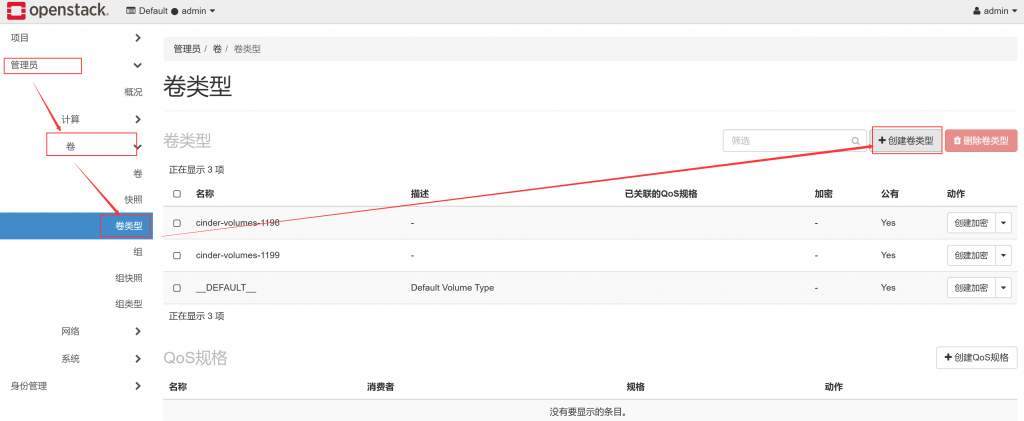

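Creating volume types

Command-line method

# Create volume types so that a new volume can be placed on a specific storage node (two storage nodes here)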

source admin-openrc

openstack volume type create $volume_name1

openstack volume type create $volume_name2

# Associate each type with its backend host

openstack volume type set $volume_name1 --property volume_backend_name=$volume_name1

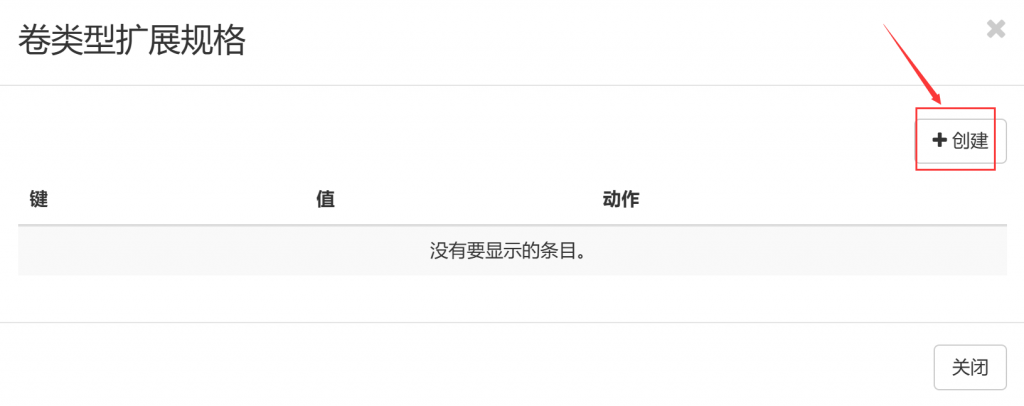

openstack volume type set $volume_name2 --property volume_backend_name=$volume_name2web方式

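Create a name that reflects the disk type for later convenience, then associate it with the storage volume group

The two key-value pairs above correspond to /etc/cinder/cinder.conf on the storage node

Verify that a volume can be created

Viewing the data in a volume

The admin panel shows the host a volume lives on and the volume's ID (a volume created without a name is displayed by its ID)

With the host and the ID, the backing disk file can be located (the volume listed below the test volume is used as the example, since it is already attached to the instance named 123)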

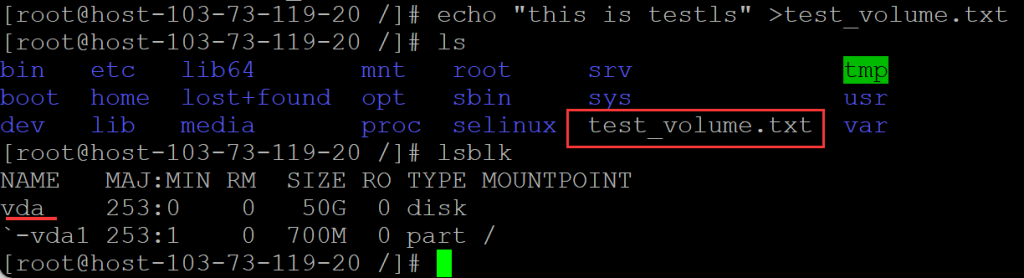

Write data on instance 123

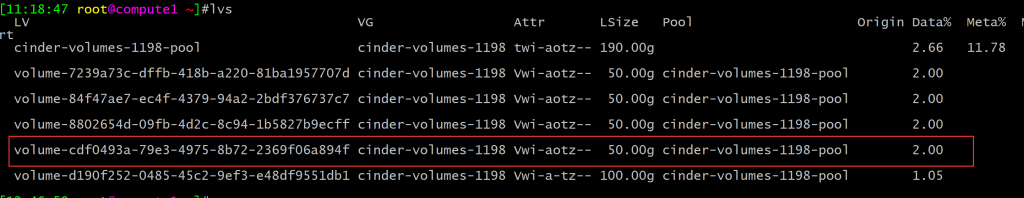

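This volume is in the cinder-volumes-1198 volume group on the compute1 node; work on that host

lvs shows the volume's details on the node and the corresponding disk file path: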

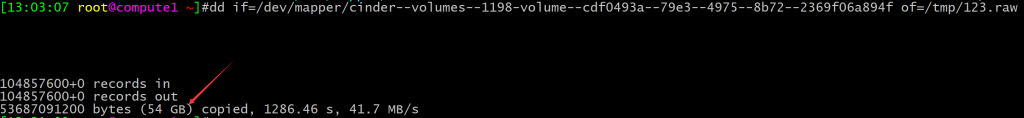

Copy the disk file out first, then mount it and read the data (the run time depends on the size of the disk file)

dd if=/dev/mapper/cinder--volumes--1198-volume--cdf0493a--79e3--4975--8b72--2369f06a894f of=/tmp/123.raw

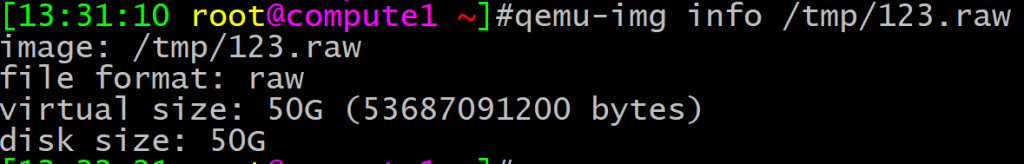

查看镜像文件信息

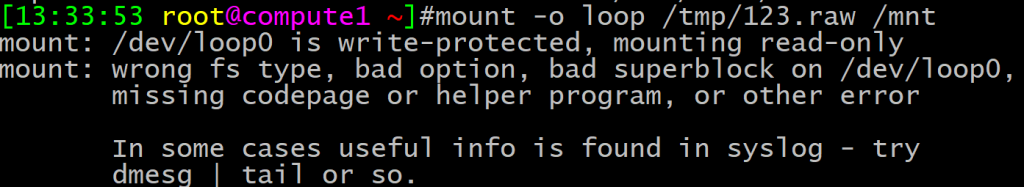

挂载磁盘文件,但报错了,可能格式不兼容吧,后期再测试下ext4和XFS格式的

mount -o loop /tmp/123.raw /mnt

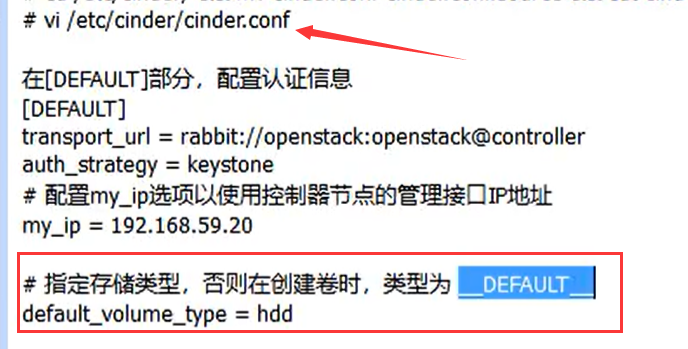

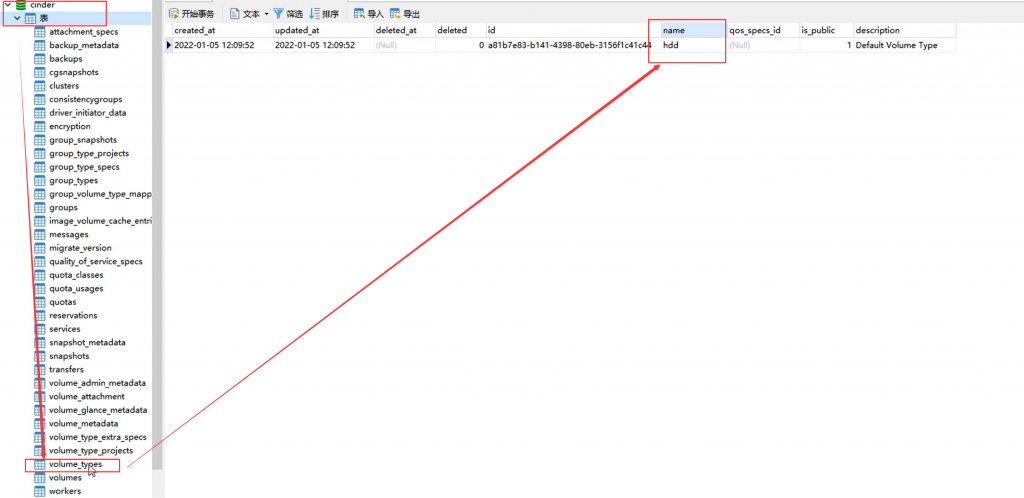
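One possible cause of the failed mount, offered only as an assumption since the error text is not reproduced here, is that the instance partitioned the volume, so the filesystem begins at a partition offset rather than at byte 0 of the image. In that case `fdisk -l /tmp/123.raw` lists each partition's start sector, and the byte offset is the start sector times the sector size. The helper names below are hypothetical.

```shell
# Byte offset of a partition inside a raw image: start sector * sector size.
partition_offset() {
    start_sector=$1
    sector_size=${2:-512}
    echo $((start_sector * sector_size))
}

# Print the mount command for a partition that starts at the given sector.
# Nothing is mounted; the command is only printed for review.
loop_mount_cmd() {
    image=$1
    start=$2
    target=${3:-/mnt}
    echo "mount -o loop,offset=$(partition_offset "$start") $image $target"
}
```

For a partition starting at sector 2048, `loop_mount_cmd /tmp/123.raw 2048` prints a mount command with `offset=1048576`.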

修改卷的默认类型

默认卷类型是_DEFAULT_

1、改成其他字符显示(控制节点上操作)

2、修改数据库

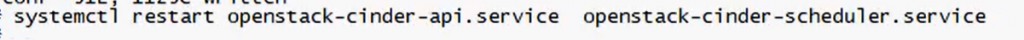

3、重启服务

4、查看dashboard

9. Integrating a ceph storage backend

1. Host plan

| Hostname | Default role | Extended role | public network | cluster network | Disks | OS |
|---|---|---|---|---|---|---|
| controller | openstack controller node | ceph-admin | 103.73.119.106 | 192.168.73.106 | 240G SSD | CentOS7.9 |
| compute1 | openstack compute node | ceph-mon1/ ceph-mgr1 | 103.73.119.116 | 192.168.73.116 | 240G SSD | CentOS7.9 |
| compute2 | openstack compute node | ceph-mon2/ ceph-mgr2 | 103.73.119.117 | 192.168.73.117 | 240G SSD | CentOS7.9 |
| compute3 | openstack compute node | ceph-mon3 | 103.73.119.222 | 192.168.73.222 | 240G SSD | CentOS7.9 |
| cinder1 | openstack storage node, lvm (1T SATA) | ceph-store1, ceph storage node (2T SATA) | 103.73.119.153 | 192.168.73.153 | 240G SSD+1T SATA+2T SATA | CentOS7.9 |
| cinder2 | | ceph-store2, ceph storage node (SSD+1T SATA+4T SDD) | 103.73.119.154 | 192.168.73.154 | 120G SSD+1T SATA+4T SDD | CentOS7.9 |

2. hosts file

#openstack-cluster

192.168.73.106 controller

192.168.73.116 compute1

192.168.73.117 compute2

192.168.73.222 compute3

192.168.73.153 cinder1 ceph-store1-cluster

192.168.73.154 cinder2 ceph-store2-cluster

# ceph-public

103.73.119.106 ceph-admin

103.73.119.116 ceph-mon1 ceph-mgr1

103.73.119.117 ceph-mon2 ceph-mgr2

103.73.119.222 ceph-mon3

103.73.119.153 ceph-store1

103.73.119.154 ceph-store2

Distribute the hosts file

for i in $(awk '15<NR&&NR<22 {print $2}' /etc/hosts);do scp /etc/hosts $i:/etc;done

3. Deploying ceph

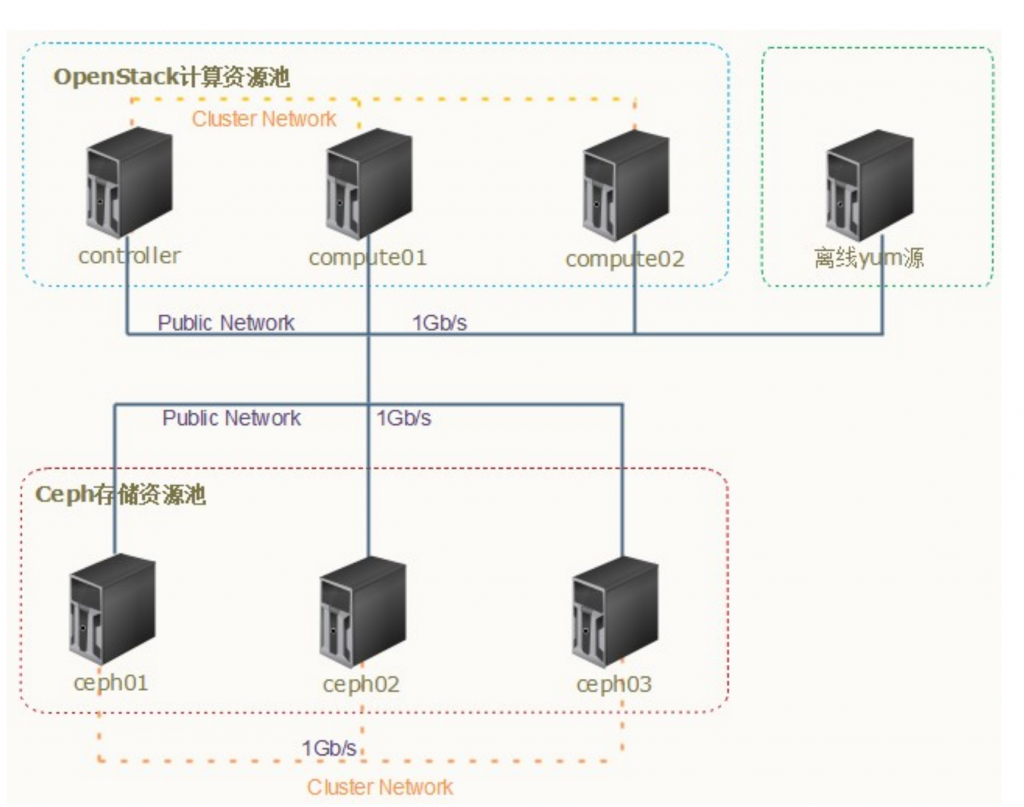

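Network architecture of openstack integrated with ceph storage

The figure above shows that the ceph cluster and the openstack cluster communicate over the public network

1. Add the ceph repository on all nodes (Nautilus)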

cat >/etc/yum.repos.d/ceph.repo<<'EOF'

[ceph]

name=Ceph packages for $basearch

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64

enabled=1

gpgcheck=0

[ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch

enabled=1

gpgcheck=0

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/SRPMS

enabled=1

gpgcheck=0

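EOF

During the integration, the Ceph packages must be installed on the OpenStack controller and compute nodes, which act as Ceph clients. This depends on the epel repository (yum install -y epel-release, or the Aliyun epel repository).

yum -y install ceph ceph-radosgw

Install on the ceph admin node (the ceph-store1 node). Initializing the mon nodes requires the hostname to match the locally resolved name, which makes the openstack nodes awkward to use for this.

yum -y install ceph-deploy ceph ceph-mds ceph-radosgw

Install on the ceph storage nodes

yum -y install ceph ceph-mds ceph-radosgw

2. Initialize the mon configuration (on compute1)

Set up passwordless login to all ceph-related nodes beforehand (this goes over the internal network)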

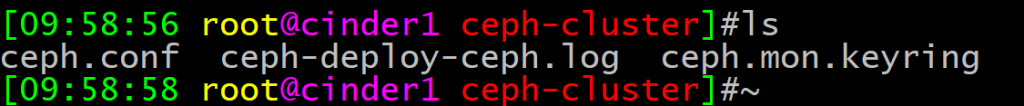

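for i in $(awk '15<NR&&NR<22 {print $2}' /etc/hosts);do ssh-copy-id $i;done

Create a new Ceph cluster and generate the cluster configuration file and keyring (run on the ceph-store1 node)

mkdir /etc/ceph-cluster && cd /etc/ceph-cluster

# The deployment currently goes over the public network. Remember: the mon hostnames must match the locally resolved names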

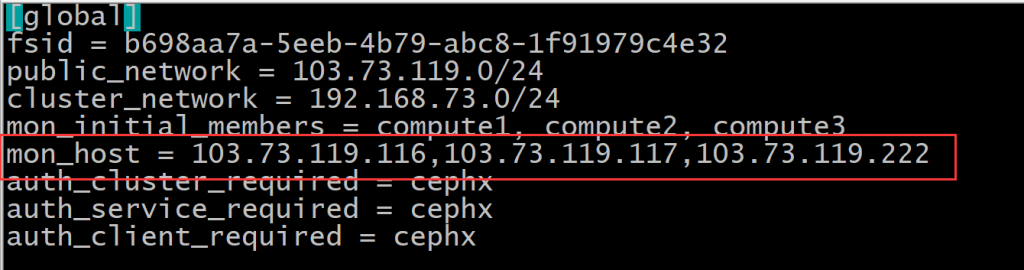

ceph-deploy new --cluster-network 192.168.73.0/24 --public-network 103.73.119.0/24 compute1 compute2 compute3

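View the configuration file

vim ceph.conf

Although the deployment went over the cluster network, the generated configuration file still lists the public network

Initialize the mon nodes (run on ceph01). Remember: the mon hostnames must match the locally resolved names

ceph-deploy mon create-initial

This step failed because the mon nodes' hostnames do not match their resolved names; to be continued once that is solved.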
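The mismatch can be detected before running ceph-deploy by comparing each name passed on the command line with what the node reports as its own short hostname. A minimal sketch follows, assuming passwordless ssh is already in place. The function names and the `GET_NAME` override are hypothetical.

```shell
# Ask a node for its own short hostname. GET_NAME can point at a stand-in
# command for a dry run; by default ssh is used.
remote_short_name() {
    ${GET_NAME:-ssh} "$1" hostname -s
}

# Compare every given mon name with the name the node reports itself.
# Returns non-zero if at least one name does not match.
check_mon_names() {
    rc=0
    for mon in "$@"; do
        actual=$(remote_short_name "$mon")
        if [ "$actual" = "$mon" ]; then
            echo "ok: $mon"
        else
            echo "mismatch: $mon reports '$actual'"
            rc=1
        fi
    done
    return $rc
}
```

With the hosts file above, `check_mon_names ceph-mon1 ceph-mon2 ceph-mon3` would be expected to flag all three nodes, since their real hostnames are compute1 to compute3.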

Sync the keyring to every node so that the other nodes can run ceph cluster management commands (run on ceph01)

# ceph-deploy --overwrite-conf admin ceph01 ceph02 ceph03 controller compute01 compute02

Verify

# ceph -s